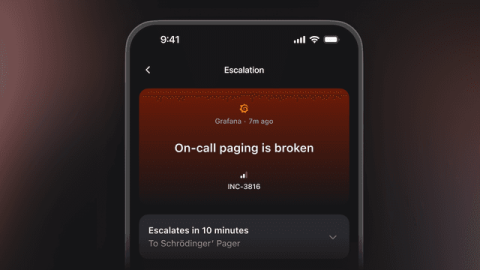

How we page ourselves if incident.io goes down

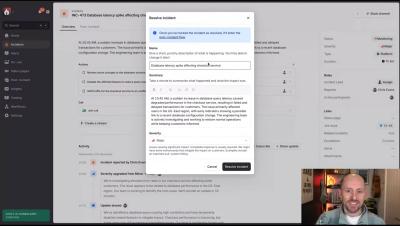

Picture this: your alerting system needs to tell you it’s broken. Sounds like a paradox, right? Yet that’s exactly the situation we face as an incident management company. We believe strongly in using our own products. After all, if we don’t trust ourselves to be there when it matters most, why should the thousands of engineers who rely on us every day? However, this poses an obvious challenge: if incident.io goes down, the tool we would use to page ourselves goes down with it.