Shipa

Your First Shipa Cloud Deployment

After registering for Shipa Cloud or installing Shipa on your own infrastructure, you are ready to deploy your first application. The beauty of Shipa is that wherever your application sits on the spectrum from source code to a built image, Shipa can help you get it into the wild.

Building a successful Platform Engineering team

We are seeing more companies build internal platform engineering teams. Along the way, we have seen some successful and some not-so-successful approaches. The goal of this article is to go through some of the practices we see out there.

Shipa Cloud with Your Minikube Cluster

Embracing any new technology stack can certainly be a journey. Whether this is your first time using Kubernetes or you have been on the Google Borg Team, getting up and running with Shipa Cloud is a breeze. Bring your own Kubernetes cluster, sign up for a Shipa Cloud account, and you are well on your way to Application as Code excellence. Leveraging minikube is a free way to take a look at Shipa Cloud.

Implementing a dashboard for your Kubernetes applications

Giving developers a portal they can use to understand application dependencies, ownership, and more has never been more critical. As you scale your Kubernetes adoption, you want to avoid service sprawl. If you do not address it early, application support will become a nightmare.

Policy as code for Kubernetes with Terraform

As you scale microservices adoption in your organization, the chances are high that you are managing multiple clusters, different environments, teams, providers, and different applications, each with its own set of requirements. As complexity increases, the question is: How do you scale policies without scaling complexity and the risk of your applications getting exposed?

Deploying microservice apps on Kubernetes using Terraform

Terraform is a popular choice among DevOps and Platform Engineering teams because engineers can use it to quickly spin up environments directly from their CI/CD pipelines.

Data applications with Kubernetes and Snowflake

For data application developers who use Snowflake as their data warehouse and are new to Kubernetes, spinning up a single cluster on a laptop and deploying a first application can seem deceptively simple. Once they start deploying data-driven applications using microservices and Kubernetes in production, the difficulty rises sharply. Developers are quickly thrown into a kind of configuration hellscape that drives productivity down for many data engineering teams.

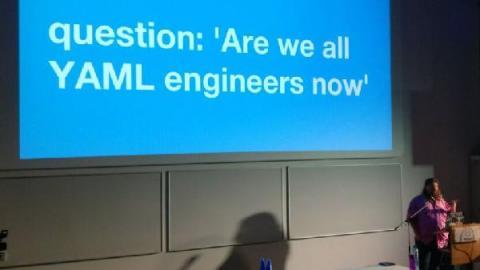

How deep is your love for YAML?

There is no doubt that YAML has developed a reputation for being a painful way to define and deploy applications on Kubernetes. The combination of semantics and significant whitespace can drive some developers crazy. As Kubernetes advances, is it time for us to explore different options that can support both DevOps teams and developers in deploying and managing applications on Kubernetes?