
InfluxData

Data lakes vs data warehouses explained

Getting Real-Time With InfluxDB

Mage for Anomaly detection with InfluxDB and Half-space Trees

Any existing InfluxDB user will notice that InfluxDB underwent a transformation with the release of InfluxDB 3.0. InfluxDB v3 provides 45x better write throughput and 5–25x faster queries than previous versions of InfluxDB.

Bringing it all together: Speed, performance, and efficiency in InfluxDB 3.0

For most of the past year, we here at InfluxData have focused on shipping the latest version of InfluxDB. To date, we have launched three commercial products (InfluxDB Cloud Serverless, InfluxDB Cloud Dedicated, and InfluxDB Clustered), with more open source options on the way. All the while, we have claimed that this latest version of InfluxDB surpasses anything we built before.

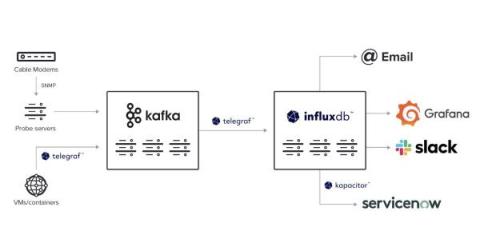

How WOW! Modernized Legacy Infrastructure Monitoring with InfluxDB and Kafka

With over 500,000 residential, business, and wholesale customers across multiple markets in the United States, WideOpenWest (WOW!) is one of the country’s largest broadband providers. The company aims to connect homes and businesses to the world with fast and reliable internet, TV, and phone services.

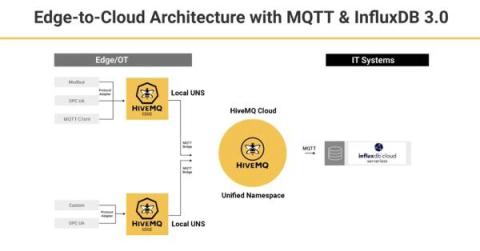

Webinar Recap: Build an Edge-to-Cloud Architecture Using MQTT and InfluxDB

Industrial IoT (IIoT) machines and sensors generate valuable time series data. Without sending operational technology (OT) data to information technology (IT) systems, a company cannot derive the insights it needs to make decisions that let it produce or operate more efficiently.

Querying Arrow tables with DataFusion in Python

InfluxDB v3 allows users to write data at a rate of 4.3 million points per second. However, an incredibly fast ingest rate like this is meaningless without the ability to query that data. Apache DataFusion is an “extensible query execution framework, written in Rust, that uses Apache Arrow as its in-memory format.” It enables 5–25x faster query responses across a broad range of query types compared to previous versions of InfluxDB that didn’t use the Apache ecosystem.

Flight, DataFusion, Arrow, and Parquet: Using the FDAP Architecture to build InfluxDB 3.0

This article coins the term “FDAP stack”, explains why we used it to build InfluxDB 3.0, and argues that it will enable and power a generation of analytics applications in the same way that the LAMP stack enabled and powered a generation of interactive websites (by the way, we are hiring!).

The Advantage of Cold Storage in InfluxDB

Imagine, if you will, having hundreds of devices that you need to monitor. All these devices generate data at sub-second intervals, and you need all that high-fidelity data for historical analysis and to feed machine learning models. Storing all that data can get really expensive, really fast. When that happens, you must decide what’s more important: keeping all your data or sacrificing insights and analysis. For many readers, this may not be a big stretch of the imagination.