Gremlin

Five Trends from SREcon Americas 2023

Last week, over five hundred SREs gathered in Santa Clara to share the latest research, tips, tricks, best practices, and more for site reliability engineering. They were joined by some of the biggest names in the reliability space. And, yes, Gremlin was there to answer any and all questions about chaos engineering and proactive reliability. After three days of great conversations and insightful talks, let’s take a look at some of the themes we heard weaving through SREcon.

How Gremlin helps you meet Google's Infrastructure Reliability standards

In January 2023, Google released its infrastructure reliability guide, which provides guidelines on how to build high-availability applications in Google Cloud. While it's written for Google Cloud, it provides some excellent general-purpose information on how to architect reliable applications on any cloud provider. In this blog, we'll explain the factors the guide covers and how you can use Gremlin to ensure you're meeting your reliability requirements.

Testing doesn't stop at staging

Imagine a perfect world where software releases ship bug-free. Developers write perfect code the first time, all tests pass without issues, operations teams effortlessly deploy builds to production, and customers never experience defects. Everyone's happy, and the Engineering team can focus exclusively on building and delivering features. Of course, we don't live in a perfect world.

The KPIs of improved reliability

For many businesses, prioritizing reliability is an ongoing challenge. Building reliable systems and services is critical for growing revenue and customer trust, but other initiatives—like building new products and features—often take precedence since they provide a clearer and more immediate return. That's not to say reliability doesn't have clear value, but proving this value to business leaders can be tricky.

How to test for expired TLS/SSL certificates using Gremlin

Transport Layer Security (TLS), and its predecessor, Secure Sockets Layer (SSL), are essential to the modern Internet. Encrypting network communications using TLS protects users and organizations from exposing in-transit data to third parties. This is especially important for the web, where TLS secures HTTP traffic (HTTPS) between backend servers and customers’ browsers.

How to integrate your load testing tool with Gremlin Reliability Management

How reliability testing and load testing are complementary

How can you tell if your systems are reliable when under load? A common answer is to open your observability dashboards, wait for a high-traffic event (like Black Friday), and cross your fingers. While this approach is certainly effective, it's far from ideal. Without proactive reliability and load testing, we have no idea if a system will hold up to real-world usage patterns, which could mean a production outage at the worst possible time.

How to identify and map service dependencies

Modern applications are a web of interdependent services. As applications grow in size and complexity, and as more engineering teams adopt service-based architectures like microservices, this web becomes deeper and denser. Eventually, keeping track of the interdependencies between services becomes a complex and time-consuming task in and of itself. In addition, if any of these dependencies fails, it can have cascading impacts on the rest of your services and on the application as a whole.

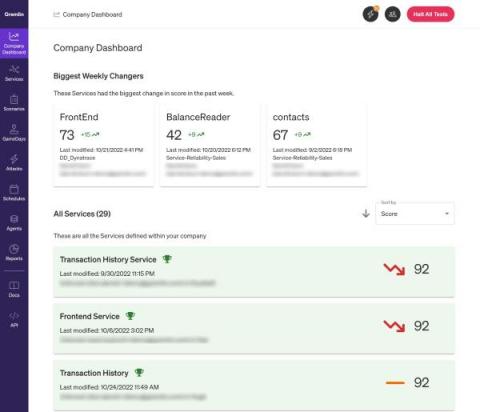

Managing and improving reliability using Gremlin's Reliability Dashboard

Part of a successful reliability program is being able to monitor and review your progress toward improving reliability. Being able to run tests on services is a big part of it, but how can you tell you're making progress if you can only see your latest test results? There should be a way to track improvements or regressions in your reliability testing practice across your organization in a way that's easy to digest. That's where the Reliability Dashboard comes in.