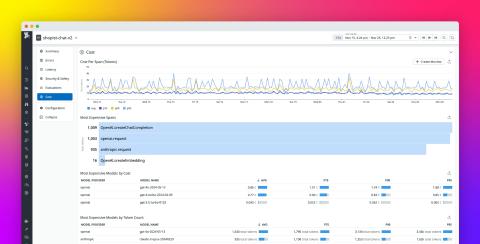

Track AI Costs with Datadog Cloud Cost Management for OpenAI! Learn More on TMiDD! #AI #CloudCost

On This Month in Datadog, we’re spotlighting Datadog Cloud Cost Management for OpenAI, which lets you break down costs by project and organization, as well as by individual model and its token consumption.