May 2023

How Netdata's ML-based Anomaly Detection Works

How does Netdata's machine learning (ML) based anomaly detection actually work? Read on to find out!

Revolutionizing Operations Centers with Netdata's Real-time Monitoring Solution

In today's fast-paced digital landscape, 24-hour operations centers play a crucial role in managing and monitoring large-scale infrastructures. These centers must be equipped with an effective monitoring solution that addresses their unique needs, enabling them to respond quickly to incidents and maintain optimal system performance. Netdata, a comprehensive monitoring solution, has been designed to meet these critical requirements with its advanced capabilities and recent enhancements.

The Future of Infrastructure Monitoring: Scalability, Automation, and AI

In this blog post, we will explore the importance of scalability, automation, and AI in the evolving landscape of infrastructure monitoring. We will examine how Netdata's innovative solution aligns with these emerging trends, and how it can empower organizations to effectively manage their modern IT infrastructure.

Monitoring Multi-Cloud and Hybrid-Cloud Infrastructures: Challenges and Best Practices

The advent of multi-cloud and hybrid-cloud architectures has created new opportunities for organizations to leverage best-in-class features from various cloud service providers. However, these complex environments present their own unique challenges, especially when it comes to monitoring and managing performance.

Navigating the Path to Cloud Migration: Key Challenges and Best Practices

Embarking on a cloud migration journey? Grasp the obstacles and arm yourself with best practices for a smooth transition. Success lies in understanding, planning, and adapting. As we continue to advance further into the 21st century, businesses of all sizes are finding themselves in the midst of a digital revolution.

Mastering Cloud Optimization: Strategies for Enhancing Performance and Reducing Costs

Unlock the full potential of your cloud investment! Discover strategies to enhance performance and reduce costs. In the dynamic world of cloud computing, optimization isn't just about cost reduction. It involves a fine balance between managing costs and maximizing value while ensuring efficient resource allocation.

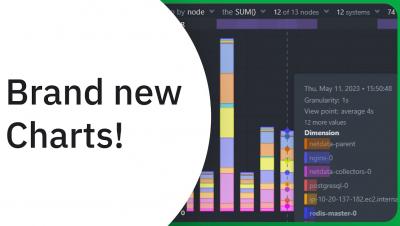

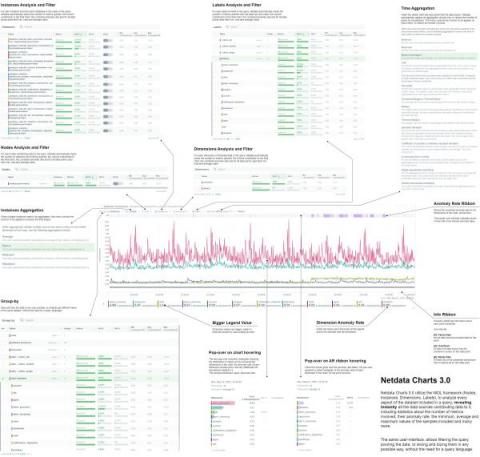

Introducing Charts v3.0: Slice, dice, filter and pivot the data in any way possible!

Transforming Monitoring with a Machine Learning-First Approach

Unlocking the full potential of monitoring through ML integration, anomaly detection, and innovative scoring engines. Machine learning has been making waves in various industries, but its adoption in the monitoring and observability space has been slower than expected. Many “ML” features remain gimmicky and deliver too little real-world value for users to keep using them.

The Future of Monitoring is Automated and Opinionated

So, you think you monitor your infra? As humanity increasingly relies on technology, the need for reliable and efficient infrastructure monitoring solutions has never been greater. However, most businesses don't take this seriously. They make poor choices that soon trap their best talent, the people who should be propelling them ahead of their competition.

Release 1.39.0: A new era for monitoring charts.

Another release of the Netdata Monitoring solution is here!

Release 1.39.0 - Charts 3.0, Windows Support, Virtual Nodes, Custom Labels, and more

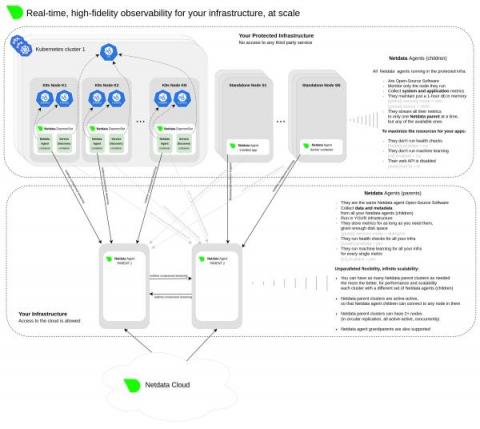

Monitoring to Infinity and Beyond - How Netdata Scales Without Limits

Scalability is crucial for monitoring systems as it ensures that they can accommodate growth, maintain performance, provide flexibility, optimize costs, enhance fault tolerance, and support informed decision-making, all of which are critical for effective infrastructure management.

Unlock the Secrets of Kernel Memory Usage

The mem.kernel chart in Netdata shows how much memory various kernel subsystems and mechanisms use. Understanding its dimensions and their technical details helps you monitor your system's kernel memory usage, spot potential issues or inefficiencies, and gain insight into the performance of your kernel and memory subsystem.

Monitoring Disks: Understanding Workload, Performance, Utilization, Saturation, and Latency

Netdata provides a comprehensive set of charts that can help you understand the workload, performance, utilization, saturation, latency, responsiveness, and maintenance activities of your disks. In this blog we will focus on monitoring disks as block devices, not as filesystems or mount points. The Disks section in the Overview tab contains all the charts that are mentioned in this blog post.

Understanding System Processes States

The different states of system processes are essential to understanding how a computer system works. Each state represents a specific point in a process's life cycle and can impact system performance and stability.

Understanding Linux CPU Consumption, Load, and Pressure for Performance Optimization

As a system administrator, understanding how your Linux system's CPU is being utilized is crucial for identifying bottlenecks and optimizing performance. In this blog post, we'll dive deep into the world of Linux CPU consumption, load, and pressure, and discuss how to use these metrics effectively to identify issues and improve your system's performance.

Understanding Context Switching and Its Impact on System Performance

Context switching is the process of switching the CPU from one process, task or thread to another. In a multitasking operating system, such as Linux, the CPU has to switch between multiple processes or threads in order to keep the system running smoothly. This is necessary because each CPU core without hyperthreading can only execute one process or thread at a time.
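As a rough illustration of how context switching can be observed, the sketch below reads the cumulative `ctxt` counter that Linux exposes in `/proc/stat` and turns two samples into a rate. The function names `parse_ctxt` and `switches_per_second` are hypothetical helpers written for this example; this is not how Netdata collects the metric.

```python
import time


def parse_ctxt(proc_stat_text: str) -> int:
    """Return the cumulative context-switch count from /proc/stat content."""
    for line in proc_stat_text.splitlines():
        fields = line.split()
        # The counter appears on a line of the form: "ctxt <number>"
        if len(fields) >= 2 and fields[0] == "ctxt":
            return int(fields[1])
    raise ValueError("no 'ctxt' line found")


def switches_per_second(before: int, after: int, interval_s: float) -> float:
    """Convert two cumulative samples into a per-second rate."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    return (after - before) / interval_s


def read_ctxt(path: str = "/proc/stat") -> int:
    """Read the counter from a live Linux system."""
    with open(path) as f:
        return parse_ctxt(f.read())


if __name__ == "__main__":
    interval = 1.0  # sampling interval in seconds (an arbitrary choice)
    first = read_ctxt()
    time.sleep(interval)
    second = read_ctxt()
    print(f"{switches_per_second(first, second, interval):.0f} context switches/s")
```

The counter is system-wide and only ever increases, so a single reading says little; the difference between two readings over a known interval is what indicates how busy the scheduler is.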

Swap Memory - When and How to Use It on Your Production Systems or Cloud-Provided VMs

Swap memory is space on a disk that supplements the physical memory (RAM) of a computer. It is one part of the operating system's virtual memory mechanism rather than a synonym for it. When physical memory runs low, the kernel moves less frequently accessed data from RAM to swap, freeing up RAM for more frequently accessed data. But should swap memory be enabled on production systems and cloud-provided virtual machines (VMs)? Let's explore the pros and cons.
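To check how much swap a Linux machine is actually using, one option is to read the `SwapTotal` and `SwapFree` fields from `/proc/meminfo`. The sketch below assumes that file format; `parse_meminfo` and `swap_used_percent` are hypothetical helper names chosen for this example.

```python
def parse_meminfo(text: str) -> dict:
    """Parse /proc/meminfo content into {field name: integer value}.

    Most fields are reported in kB; the unit suffix is ignored here.
    """
    values = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue  # skip lines that are not "Key: value" pairs
        parts = rest.split()
        if parts:
            values[key.strip()] = int(parts[0])
    return values


def swap_used_percent(meminfo: dict) -> float:
    """Return swap usage as a percentage, or 0.0 when no swap is configured."""
    total = meminfo.get("SwapTotal", 0)
    if total == 0:
        return 0.0
    free = meminfo.get("SwapFree", 0)
    return 100.0 * (total - free) / total


if __name__ == "__main__":
    with open("/proc/meminfo") as f:
        info = parse_meminfo(f.read())
    print(f"swap used: {swap_used_percent(info):.1f}%")
```

A machine with `SwapTotal` of zero has no swap enabled at all, which is common on cloud-provided VMs, so the helper reports 0.0 instead of dividing by zero.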

Understanding Interrupts, Softirqs, and Softnet in Linux

Interrupts, softirqs, and softnet are all critical parts of the Linux kernel that can impact system performance. In this blog post, we'll explore their usefulness, and discuss how to monitor them using Netdata for both bare-metal servers and VMs.