
Latest News

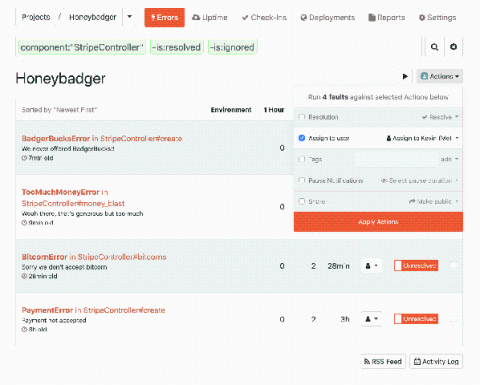

Honeybadger Actions

Have you ever wanted to update all your errors at once, or set defaults for incoming errors? Well, we are releasing some helpful tools for error management that we call Honeybadger Actions.

How We Used JMH to Benchmark Our Microservices Pipeline

At LogicMonitor, we are continuously improving the performance and scalability of our platform. One of the key features of the LogicMonitor platform is the ability to post-process the data returned by monitored systems using data not available in the raw output, i.e. complex datapoints. Because complex datapoints are computed by LogicMonitor itself after raw data collection, computing them is one of the most computationally intensive parts of LogicMonitor’s metrics processing pipeline.

How SLIs Help You Understand Users' Needs

What Are the Hardest Parts of Kubernetes to Learn?

Many enterprises have already adopted Kubernetes or have a Kubernetes migration plan in place, making it clear that the platform is here to stay. While it provides a lot of benefits to its users, to take advantage of them, you need to thoroughly learn Kubernetes and how it works in production. Typically, the most difficult aspects of Kubernetes are learned through experience solving real-world problems.

5 Ways to Improve Your Dev Team Velocity

Velocity, much like the pulse rate or oxygen level of an individual, is an important measure of health for your development team. A low velocity score for recent sprints limits your team's options for delivering value. Sustained failure to deliver can quickly erode your stakeholders' trust. But how do you know exactly what your velocity is and how you can improve it?

We've Raised $27 Million in New Funding, Here's How We're Investing It

Today we’re announcing a new round of funding that brings an additional $27M to help us invest in growing and building Codefresh. When we started, we wanted to revolutionize the way people build and deploy software with continuous integration and delivery. I’m proud to say that we were the first platform to see the game-changing value of containers and Kubernetes.

Enhanced visibility for cost optimization across your Kubernetes clusters

Cloud cost management is essential for business success, especially in times of business and global volatility. However, gaining quick and clear visibility into all your containerized workloads, so that you can control and improve the way they are used, has not been simple to date. At Spot, we have been addressing these issues and recently introduced cost analysis and showback tools for Kubernetes within our Ocean solution.

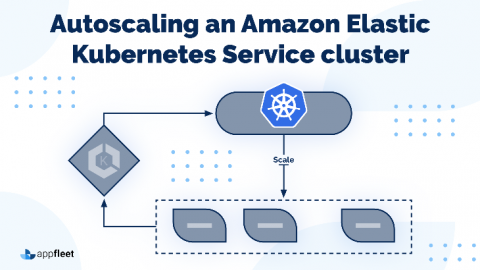

Autoscaling an Amazon Elastic Kubernetes Service cluster

In this article we are going to consider the two most common methods for autoscaling in an EKS cluster. The Horizontal Pod Autoscaler (HPA) is a Kubernetes component that automatically scales your service based on metrics such as CPU utilization, as reported through the Kubernetes Metrics Server. The HPA scales the pods in either a deployment or replica set, and is implemented as a Kubernetes API resource and a controller.
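As a rough illustration of how the HPA turns a metric into a replica count, the sketch below applies the scaling rule described in the Kubernetes documentation: the desired count is the current count multiplied by the ratio of the observed metric to the target, rounded up. The function name, parameter names, and the min/max bounds used here are hypothetical and chosen only for this example. The real controller also accounts for pod readiness, missing metrics, and stabilization windows, none of which are modeled here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     tolerance=0.1, min_replicas=1, max_replicas=10):
    """Simplified sketch of the HPA scaling rule (hypothetical helper).

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped
    to the [min_replicas, max_replicas] range.
    """
    ratio = current_metric / target_metric

    # Close enough to the target: leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas

    desired = math.ceil(current_replicas * ratio)

    # Never scale outside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target.
    print(desired_replicas(4, 90, 60))
```

With these assumptions, a service running 4 pods at 90% average CPU against a 60% target would be scaled out, while the same service at 62% would be left alone because it falls inside the tolerance band.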

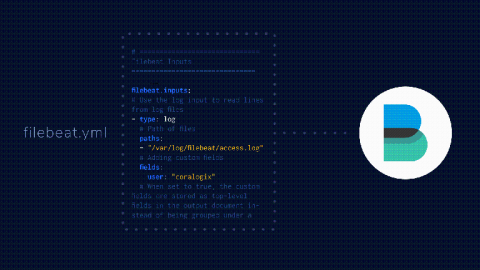

Filebeat Configuration Best Practices Tutorial

In this post, we will cover some of the main use cases Filebeat supports and examine various Filebeat configurations. Filebeat, an Elastic Beat that’s based on the libbeat framework from Elastic, is a lightweight shipper for forwarding and centralizing log data. Installed as an agent on your servers, Filebeat monitors the log files or locations that you specify, collects log events, and forwards them either to Elasticsearch for indexing or to Logstash for further processing.