Release 1.16.0: Smarter binaries and built-in TLS

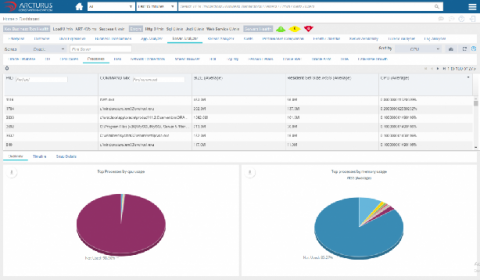

We’re excited to launch release v1.16.0 of the open-source Netdata monitoring agent, which delivers real-time health monitoring and performance troubleshooting for nearly any system or application. This release also contains 40 bug fixes, 31 improvements, and 20 documentation updates. For the complete list, check out the full release notes.