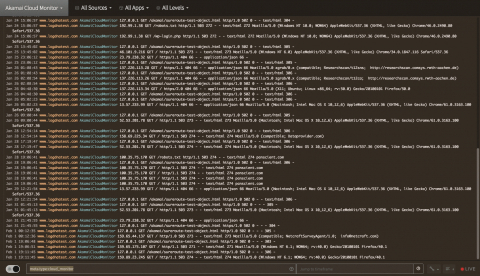

Server Monitoring with Logz.io and the ELK Stack

In a previous article, we explained the importance of monitoring the performance of your servers. Keeping tabs on metrics such as CPU, memory, disk usage, uptime, network traffic and swap usage helps you gauge the general health of your environment and gives you the context you need to troubleshoot and solve production issues.