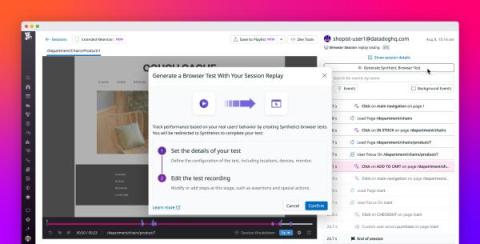

Create browser tests directly from Datadog RUM Session Replay

Testing is a key part of application development and helps you maintain a reliable experience for your users. But the process can be difficult to scale, and it is often siloed within a single team or individual who lacks broad knowledge of your application’s UI. As a result, organizations can end up investing in sizable test suites that do not accurately represent real user behavior.