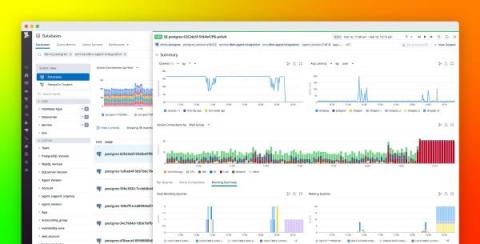

Monitoring RabbitMQ performance with Datadog

In Part 2 of this series, we saw how RabbitMQ ships with tools for monitoring different aspects of your application: how your queues handle message traffic, how your nodes consume memory, whether your consumers are operational, and so on. But while RabbitMQ plugins and built-in tools give you a view of your messaging setup in isolation, RabbitMQ weaves through the very design of your applications.