
Logging

The latest News and Information on Log Management, Log Analytics and related technologies.

Network Security: The Journey from Chewiness to Zero Trust Networking

Network security has changed a lot over the years; it had to. From wide-open infrastructures to tightly controlled environments, the standard practices of network security have grown steadily more sophisticated. This post takes us back in time to trace the journey a typical network has taken over the past 15+ years, from a wide-open, "chewy" network all the way to zero trust networking. Let's get started.

A Practical Guide to Logstash: Parsing Common Log Patterns with Grok

In a previous post, we explored the basic concepts behind using Grok patterns with Logstash to parse files. We saw how versatile this combination is and how it can be adapted to process almost anything we throw at it. But the first few times you use a tool, it can be hard to figure out how to configure it for your specific use case.
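To make the idea behind Grok concrete: a Grok expression is a regular expression in which `%{PATTERN:field}` macros expand to named capture groups. The sketch below mimics that expansion in plain Python. It is not Logstash's implementation, and the `PATTERNS` table is a hypothetical, simplified stand-in for the much larger and stricter pattern library Logstash ships with.

```python
import re
from typing import Optional

# Hypothetical, simplified subset of Grok pattern definitions (assumption:
# Logstash's real IP, URIPATH, etc. patterns are considerably stricter).
PATTERNS = {
    "IP": r"\d{1,3}(?:\.\d{1,3}){3}",
    "WORD": r"\w+",
    "NUMBER": r"\d+(?:\.\d+)?",
    "URIPATH": r"/[^\s?]*",
    "GREEDYDATA": r".*",
}

# Matches %{NAME} or %{NAME:field} inside a Grok expression.
GROK_TOKEN = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def grok_to_regex(grok: str) -> re.Pattern:
    """Expand %{...} macros into a compiled regex anchored to the whole line."""
    def expand(m: re.Match) -> str:
        name, field = m.group(1), m.group(2)
        body = PATTERNS[name]
        # Named macros become named capture groups; unnamed ones are only matched.
        return f"(?P<{field}>{body})" if field else f"(?:{body})"

    # Text outside the macros is treated as regex, as it is in Grok.
    return re.compile("^" + GROK_TOKEN.sub(expand, grok) + "$")


def parse(grok: str, line: str) -> Optional[dict]:
    """Return the captured fields as a dict, or None if the line doesn't match."""
    m = grok_to_regex(grok).match(line)
    return m.groupdict() if m else None
```

For example, `parse("%{IP:client} %{WORD:method} %{URIPATH:request} %{NUMBER:bytes} %{NUMBER:duration}", "203.0.113.7 GET /index.html 15824 0.043")` returns a dict with `client`, `method`, `request`, `bytes` and `duration` keys. As in Grok, every captured value is a string unless it is converted afterwards.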

Amazon: NOT OK - why we had to change Elastic licensing

We recently announced a license change in a blog post and an FAQ. We posted some additional guidance on the license change this morning. I wanted to share why we had to make this change. This was an incredibly hard decision, especially with my background and history around Open Source. I take our responsibility very seriously. And to be clear, this change most likely has zero effect on you, our users. It has no effect on our customers that engage with us either in cloud or on premises.

How to Troubleshoot AWS Lambda Log Collection in Coralogix

AWS Lambda is a serverless compute service that runs your code in response to events and automatically manages the underlying compute resources for you. Code deployed to AWS Lambda is packaged as Lambda functions, and the events those functions respond to are called triggers. Lambda functions are very useful for log collection (think of log arrival as a trigger), and Coralogix makes extensive use of them in its AWS integrations.
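To illustrate what "log arrival as a trigger" looks like, here is a minimal sketch of a Python Lambda handler invoked by a CloudWatch Logs subscription filter. CloudWatch Logs delivers such events as base64-encoded, gzip-compressed JSON under `event["awslogs"]["data"]`. This is not Coralogix's shipper; forwarding to a logging backend is left out, and the handler simply returns the decoded messages.

```python
import base64
import gzip
import json


def lambda_handler(event, context):
    """Decode a CloudWatch Logs subscription event and return its log messages."""
    # The payload arrives base64-encoded and gzip-compressed.
    compressed = base64.b64decode(event["awslogs"]["data"])
    payload = json.loads(gzip.decompress(compressed))

    # CONTROL_MESSAGE events are reachability checks from CloudWatch Logs
    # and carry no application logs, so skip them.
    if payload.get("messageType") != "DATA_MESSAGE":
        return []

    # Each entry in logEvents has an id, a timestamp and the raw message.
    # A real log shipper would send these to its backend at this point.
    return [log_event["message"] for log_event in payload["logEvents"]]
```

When a trigger seems to fire but no logs appear downstream, a useful first check is to invoke a handler like this locally with a hand-built event. Gzip and base64-encode a JSON document that has `messageType` set to `DATA_MESSAGE` and a `logEvents` list, then confirm that the decoding step works before looking at the forwarding side.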

Cloud Profiler provides app performance insights, without the overhead

Do you have an application that's a little… sluggish? Cloud Profiler, Google Cloud's continuous application profiling tool, can quickly find poorly performing code that slows your app and drives up your compute bill. In fact, by helping you find the source of memory leaks and other errors, Profiler has helped some of Google Cloud's largest accounts reduce their CPU consumption by double-digit percentage points.

observIQ Cloud: Log Management Made Simple

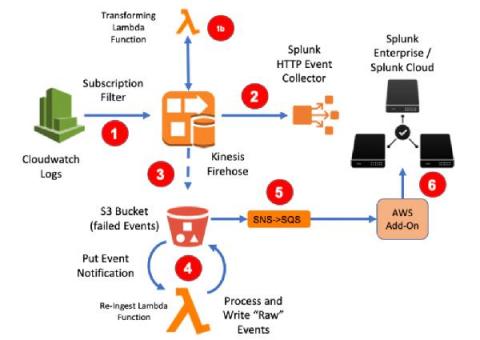

AWS Firehose to Splunk - Two Easy Ways to Recover Those Failed Events

Because Kinesis Firehose is Splunk's preferred option for collecting logs at scale from AWS CloudWatch Logs, we've seen plenty of posts on setting it up, automating it, and transforming event content. But what about when things go wrong?

Multi-Cloud Archive & Restore: Azure Blob Storage and AWS S3 Support

Logz.io has recently launched its Smart Tiering solution, which gives you the flexibility to place data on different tiers to optimize cost, performance and availability. Our mission has been to make Smart Tiering a multi-cloud and multi-region service. As part of this launch, we are glad to announce that the Historical Tier now supports Microsoft Azure Blob Storage, alongside AWS S3.

Kusto: Table Joins and the Let Statement

In this article I'm going to discuss table joins and the let statement in Log Analytics. Along with custom logs, these are concepts that really had me scratching my head for a long time, and it was tricky to put all the pieces together from the documentation and other people's blog posts. Hopefully this will help anyone else out there who still has unanswered questions about either of these topics.