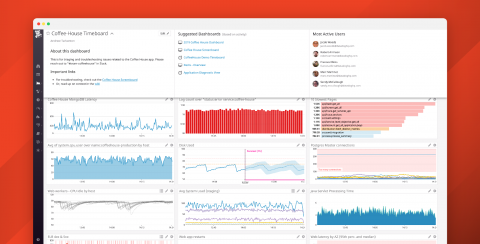

Monitor your customer data infrastructure with Segment and Datadog

This is a guest post by Noah Zoschke, Engineering Manager at Segment. Segment is the customer data infrastructure that makes it easy for companies to collect, clean, and control their first-party customer data. At Segment, our ultimate goal is to collect data from Sources (e.g., a website or mobile app) and route it to one or more Destinations (e.g., Google Analytics and Amazon Redshift) as quickly and reliably as possible.