Dash 2019: Guide to Datadog's newest announcements

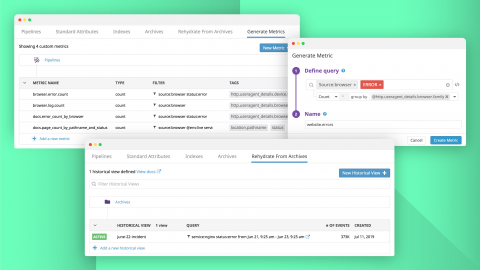

At Dash 2019, we are excited to share a number of new products and features on the Datadog platform. With the addition of Network Performance Monitoring, Real User Monitoring, support for collecting browser logs, and single-pane-of-glass visibility for serverless environments, Datadog now provides even broader coverage of the modern application stack, from frontend to backend.