Operations | Monitoring | ITSM | DevOps | Cloud

Honeycomb

5 Ways You Can Utilize Observability to Make Your Next Migration Easier

When people hear the word “migration,” they typically think about migrating from on-prem to the cloud. In reality, companies do migrations of varying types and sizes all the time. However, many teams delay making critical migrations or technical upgrades because they don’t have the proper tools and frameworks to de-risk the process.

How Traceloop Leverages Honeycomb and LLMs to Generate E2E Tests

At Traceloop, we’re solving the single thing engineers hate most: writing tests for their code. More specifically, writing tests for complex systems with lots of side effects, such as this imaginary one, which is still a lot simpler than most architectures I’ve seen: As you can see, when an API call is made to a service, there are a lot of things happening asynchronously in the backend; some are even conditional.

Observing the Future: The Power of Observability During Development

Just when you thought everything that could be shifted left has been shifted left, we’re sorry to say you’ve missed something: observability. Modern software development—where code is shipped fast and fixed quickly—simply can’t happen without building observability in before deployments happen. Teams need to see inside the code and CI/CD pipelines before anything ships, because finding problems early makes them easier to fix.

All the Hard Stuff Nobody Talks About when Building Products with LLMs

Earlier this month, we released the first version of our new natural language querying interface, Query Assistant. People are using it in all kinds of interesting ways! We’ll have a post that really dives into that soon. However, I want to talk about something else first. There’s a lot of hype around AI, and in particular, Large Language Models (LLMs).

5 Ways to Increase Release Velocity with Observability

The pressure on today’s development teams is real: innovate, release quickly, and then do it all again, only faster. Is it any surprise that studies are showing 83% of software developers are feeling burnout? We’re here to remind you that it doesn’t have to be this way.

Developing with OpenAI and Observability

Honeycomb recently released our Query Assistant, which uses ChatGPT behind the scenes to build queries based on your natural language question. It's pretty cool. While developing this feature, our team (including Tanya Romankova and Craig Atkinson) built tracing in from the start, and used it to get the feature working smoothly. Here's an example. This trace shows a Query Assistant call that took 14 seconds. Is ChatGPT that slow? Our traces can tell us!

5 Ways Honeycomb Saves Time, Money, and Sanity

There’s a reason everyone dreads debugging, especially in today’s complex cloud systems: it’s at the high stakes nexus of nervous senior management, overworked engineers, neverending rabbit holes, copious buckets of time, and fickle customers.

A Systematic Approach to Collaboration and Contributing to the Lattice Design System

The Honeycomb design team began work on Lattice in early 2021. Over several months, we worked to clean up and optimize typography, color, spacing, and many other product experience areas. We conducted an extensive audit of all components, documenting design inconsistencies and laying the foundation for a sustainable design system. However, a more extensive evaluation and audit were necessary before updating or developing components.

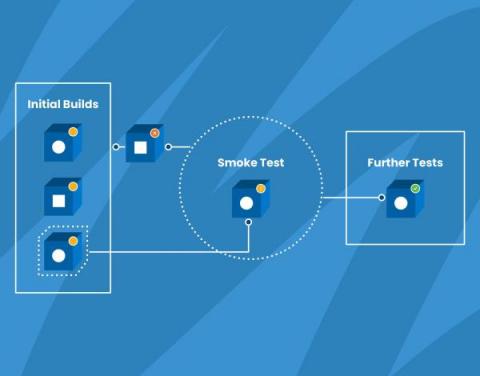

How We Use Smoke Tests to Gain Confidence in Our Code

Wikipedia defines smoke testing as “preliminary testing to reveal simple failures severe enough to, for example, reject a prospective software release.” Also known as confidence testing, smoke testing is intended to focus on some critical aspects of the software that are required as a baseline.