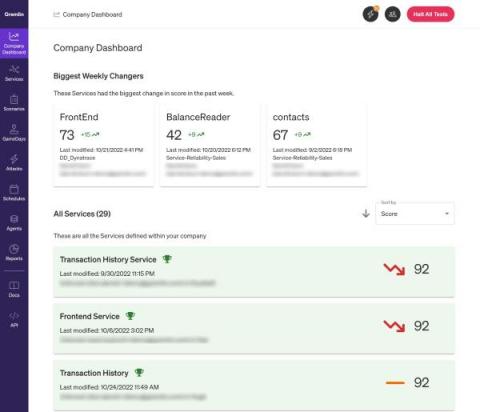

Managing and improving reliability using Gremlin's Reliability Dashboard

Part of a successful reliability program is being able to monitor and review your progress toward improving reliability. Being able to run tests on services is a big part of it, but how can you tell you're making progress if you can only see your latest test results? There should be a way to track improvements or regressions in your reliability testing practice across your organization in a way that's easy to digest. That's where the Reliability Dashboard comes in.