Guide to Logging Your IBM Cloud Resources with LogDNA

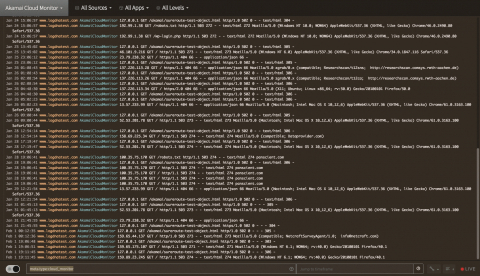

We hope you’re enjoying your time at IBM Think 2019. Thank you for dropping by to chat with our team (at booth 598) and for checking out our blog. As promised, setting up modern logging for your Kubernetes clusters on IBM Cloud is really easy. In this article, we’ll take a closer look at IBM Log Analysis with LogDNA and how to use it to log your Kubernetes clusters on IBM Cloud.