
DevOps

The latest News and Information on DevOps, CI/CD, Automation and related technologies.

SysAdmin Day 2018: IT Ambush, Round 2

SysAdmin Day 2018: IT Ambush, Round 3

Prometheus vs. Graphite: Which Should You Choose for Time Series or Monitoring?

One of the key performance indicators of any system, application, product, or process is how certain parameters or data points perform over time. What if you want to monitor hits on an API endpoint or database latency in seconds? A single data point captured in the present moment won’t tell you much by itself. However, tracking that same data point over time reveals its trend, including how a change affects a particular metric.

SysAdmin Day 2018: IT Ambush, Round 1

How to Succeed with ABM: Tips from Zenoss CMO Megan Lueders

Modernizing Development with Continuous Shipping

Ahoy there. Continuous shipping: a concept many companies talk about but never get around to implementing. Ever wonder why that is? In the first post of this three-part series, we’ll go over(board) the concept of continuous shipping and why it’s valuable. Parts two and three will deep-dive into the process implementation. Buckle that life jacket; it’s time to set sail.

Striking a balance between speed and quality in continuous delivery

Over the past few years, we have accelerated into a fast-paced ‘everything now’ era. Endless tech-powered innovations are giving customers more choices than ever before. When developing new products and features, continuous delivery and rapid software release cycles are now the norm. Enterprises have to adapt, improve, and deliver solutions faster to stay competitive and meet changing customer needs.

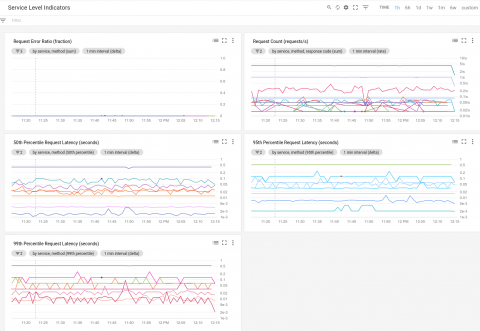

SRE fundamentals: SLIs, SLAs and SLOs

Next week at Google Cloud Next ’18, you’ll be hearing about new ways to think about and ensure the availability of your applications. A big part of that is establishing and monitoring service-level metrics, something that our Site Reliability Engineering (SRE) team does day in and day out here at Google.

5 ways to make the most of Jira Software and Bitbucket

In a recent study of software development teams using Jira Software, we found those that integrate with Bitbucket release 14% faster than those that don’t. For many industries where the pace of change is rapid and the market incredibly competitive, it’s this speed that can separate the great products in the eyes of users from the merely “good” ones.