
Latest News

Import ECS Fargate into Spot Ocean

AWS Fargate is a serverless compute engine for containers that works with both Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS). Because Fargate handles instance provisioning and scaling, users don’t have to spin up instances when their applications need resources. While this has many benefits, it also has its share of challenges, which can limit its applicability to many use cases.

Troubleshooting vs. Debugging

The life of a developer these days is more complicated than ever, as developers are increasingly required to expand their knowledge across the stack, understand abstract concepts, and own their code end-to-end. A major (and very frustrating) part of a developer’s day is spent fixing what they’ve built: scouring logs and lines of code in search of a bug. This search becomes even harder in a distributed Kubernetes environment, where the number of daily changes can run into the hundreds.

Heroku vs AWS: What is the cheapest for your startup?

In today's digital age, the internet and computer technologies have become part of our everyday lives. Organizations are moving their applications to the cloud to gain flexibility and lower costs. Heroku and AWS are two popular cloud service providers. AWS is a cloud services platform offering computing power, database storage, content delivery, and many other functions, and users can choose individual features and services as needed.

Kubernetes application dashboard

Giving developers a portal they can use to understand application dependencies, ownership, and more has never been more critical. As you scale your Kubernetes adoption, you want to avoid service sprawl; if you don’t address it early, application support will become a nightmare.

How to Build and Run Your Own Container Images

The rise of containerization has been a revolutionary development for many organizations. Being able to deploy applications of any kind on a standardized platform with robust tooling and low overhead is a clear advantage over many of the alternatives. Viewing container images as a packaging format also allows users to take advantage of pre-built images, shared and audited publicly, to reduce development time and rapidly deploy new software.

How ORAN is moving the industry to a cloud-native model

Open RAN (ORAN) is a game-changing evolution of the Radio Access Network (RAN) that combines RAN functionality with cloud-native design, scale, and automation. Legacy RAN was, and still is, intentionally built on closed, proprietary architectures that lock operators into a single vendor for both radio and supporting hardware (baseband units). With ORAN, operators can not only break that vendor lock-in but also decouple software from hardware, easing the migration to a cloud-native model.

Civo update - September 2021

Welcome to the Civo update for September 2021. In case you missed the big news, this week we launched our first region in Frankfurt, Germany. We also announced our new strategic partner, THG Ingenuity, which has invested $2 million in the company. This will allow us to invest quickly in our infrastructure, including more regions across the globe over the next 12 months.

Kubernetes, Give Me a Queue

A few months ago, we introduced a new Messaging Topology Operator. As we noted in our announcement post, this new Operator (we use the upper-cased “Operator” to denote Kubernetes Operators, as opposed to human platform or service operators) takes the concept of VMware Tanzu RabbitMQ infrastructure-as-code a step further. It allows platform or service operators and developers to quickly create users, permissions, queues, and exchanges, as well as queue policies and parameters.

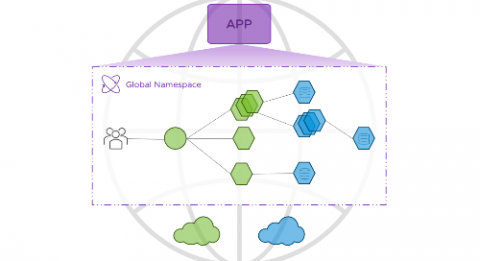

Application Resiliency for Cloud Native Microservices with VMware Tanzu Service Mesh

Modern microservices-based applications bring a new set of challenges when it comes to operating at scale across multiple clouds. Most modernization projects aim to increase the speed at which business features are delivered, and that speed demands a highly flexible, microservices-based architecture. As a result, the architectural convenience developers create on day 1 becomes a challenge for site reliability engineers (SREs) on day 2.