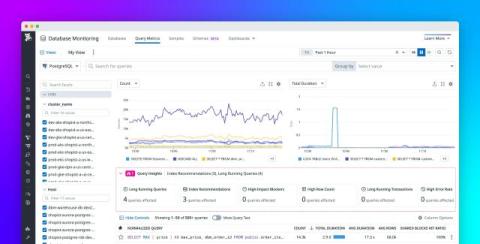

Optimize PostgreSQL performance with Datadog Database Monitoring

PostgreSQL is a widely used open source relational database that many organizations operate as a core part of their infrastructure stack. Because databases are mission-critical, issues with them can have outsize downstream impacts on user experience, service performance, and data retention, so it is vital to identify and address problems quickly.