Cloud

The latest News and Information on Cloud monitoring, security and related technologies.

Kubernetes vs YARN for scheduling Apache Spark

Spark is one of the most widely used compute engines for big data analytics. It excels at batch and real-time stream processing, and powers machine learning, AI, NLP and data analysis applications. Its in-memory processing capabilities have driven its rise in popularity. As Spark usage grows, the older Hadoop stack is declining, held back by limitations that make it harder for data teams to realize business outcomes.

Cloud-Hosted or Cloud-Native? Discover Why Cloudsmith Was Born in the Cloud

Almost every service is now offered in a “Cloud” variant. But what does that really mean? Are all cloud services equal? It’s easy to see why so many vendors rush to add a Cloud edition of the established software they sell. There has undoubtedly been a move to Cloud services across the industry, as more and more organizations seek the higher reliability and lower total cost of ownership that Cloud platforms promise.

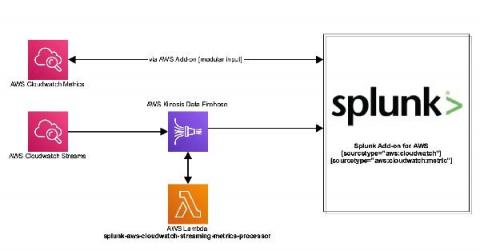

Stream Your AWS Services Metrics to Splunk

Amazon Web Services (AWS) recently announced the launch of CloudWatch Metric Streams. Metric Streams can send metrics from a number of different AWS resources to target destinations using Amazon Kinesis Data Firehose. This differs from the existing polling-based architecture: instead of a consumer repeatedly polling for metrics, they are delivered via a Kinesis Data Firehose stream. This is a highly scalable and far more efficient way to retrieve AWS service metrics.

IaaS, PaaS, SaaS - Sorting Through the Alphabet Soup of "as a Service"

A move to the cloud can seem daunting, especially when you’re unsure if IaaS, PaaS or SaaS is right for your business. Read on to crack the acronym code.

Understanding The AWS Shared Security Model

Whether you are new to AWS or have been to every re:Invent since 2012, you may have questions about cloud security and how it affects your valuable technology and data. In particular, you might be wondering where AWS’s security responsibilities end and where yours begin. Which parts of the cloud can you rely on Amazon’s security team and technology to keep safe, and which parts must you take care of yourself?

SRE fundamentals 2021: SLIs vs. SLAs vs. SLOs

A big part of ensuring the availability of your applications is establishing and monitoring service-level metrics—something that our Site Reliability Engineering (SRE) team does every day here at Google Cloud. The end goal of our SRE principles is to improve services and in turn the user experience. The concept of SRE starts with the idea that metrics should be closely tied to business objectives. In addition to business-level SLAs, we also use SLOs and SLIs in SRE planning and practice.
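To make the three terms concrete, here is a minimal sketch of how an availability SLI might be computed and compared against an SLO and an SLA. The `Window` type, the function names, and all of the numbers (request counts, the 99.9% SLO, the 99.5% SLA) are illustrative assumptions and are not taken from the article.

```python
from dataclasses import dataclass


@dataclass
class Window:
    """Request counts observed over one measurement window (hypothetical type)."""
    total_requests: int
    good_requests: int


def availability_sli(window: Window) -> float:
    """SLI: the measured fraction of requests that were served successfully."""
    if window.total_requests == 0:
        # Assumption: a window with no traffic is treated as fully available.
        return 1.0
    return window.good_requests / window.total_requests


def meets_target(sli: float, target: float) -> bool:
    """True when the measured SLI is at or above the given target."""
    return sli >= target


if __name__ == "__main__":
    # Illustrative numbers only.
    window = Window(total_requests=1_000_000, good_requests=999_200)
    slo_target = 0.999  # internal objective (SLO), assumed value
    sla_target = 0.995  # looser contractual commitment (SLA), assumed value

    sli = availability_sli(window)
    print(f"SLI: {sli:.4%}")
    print(f"Meets SLO ({slo_target:.1%}): {meets_target(sli, slo_target)}")
    print(f"Meets SLA ({sla_target:.1%}): {meets_target(sli, sla_target)}")
```

In this sketch the SLI is the measurement, the SLO is the internal target it is checked against, and the SLA is a looser, externally agreed threshold, so an SLO miss can be acted on before the SLA is at risk.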