
Latest Posts

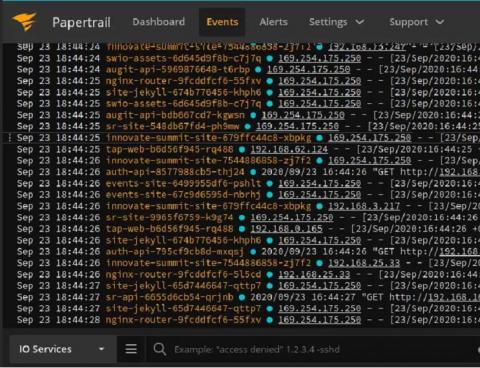

Quick and Easy Way to Implement Kubernetes Logging

How Our Latest Release Makes Your PagerDuty Experience Frictionless

In a world that’s always on, keeping services up and running isn’t just ideal—it’s mission-critical for all of PagerDuty’s customers. It’s not lost on us that serving as the central nervous system for digital operations at some of the world’s largest companies is no small job.

Docker Compose: An Introduction to Container Orchestration with Pandora FMS

We are continuing our series of articles on containers. We started by creating our own images with docker build and saw how to run them with docker run. Today we will learn what Docker Compose is and begin our journey into the world of container orchestration. Up to now we have managed containers manually and one at a time, which can be perfectly workable for a specific test. But as the number of containers to manage grows, this approach quickly becomes unmanageable.

Add flexibility to your data science with inference pipeline aggregations

Elastic 7.6 introduced the inference processor for performing inference on documents as they are ingested through an ingest pipeline. Ingest pipelines are incredibly powerful and flexible, but they are designed to work at ingest time. So what happens if your data has already been ingested? Enter the new Elasticsearch inference pipeline aggregation, which lets you apply new inference models to data that's already been indexed.

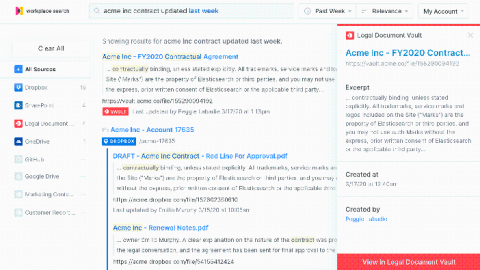

Getting started with Elastic Workplace Search on Elastic Cloud

Chances are you've already spent a big part of your day looking for a document, an email, or an answer buried deep within a Google Slides presentation. Thankfully, you've landed in the right place. With Workplace Search, finding the right information across all your cloud and on-premises data platforms is now easier than ever, and it's only a few clicks away.

DevOps/SRE Model: Bursting the Developer's Bubble. Here's the CTO Perspective.

Many organizations are transitioning toward a DevOps operational model, in which software developers, rather than a centralized IT operations group, are responsible for operating the applications they develop. In this "CTO Perspective" interview, we talk to BigPanda's CTO Elik Eizenberg about the challenges of that transition and what it takes to make it easier. Sit back and watch the interview or, if you prefer reading, take a few minutes to read the transcript.

The Complete AWS Lambda Handbook for Beginners (Part 3)

Welcome to the final installment of our Complete AWS Lambda Handbook series! Given that Lambda is often the central point of many serverless applications, we wanted to make sure we didn't skip or breeze past any part of it. In this episode, we look at some of the limitations and difficulties of using AWS Lambda, how to overcome them, and the importance of monitoring for performance and failure remediation.

Adding Tracing with Jaeger to a TypeScript Express Application

I have been following distributed tracing technologies (Zipkin, OpenTracing, Jaeger, and others) for several years without trialing any of them in depth. Just before the holidays, we were fielding a number of those "why is this slow?" questions about an Express application, written in TypeScript, that provides an API endpoint.

Dedicated, On-Demand, Reserved and Spot: Demystifying the Terminology of AWS Instances

So you've got an app ready for launch, and unlike in the past, when you simply ran it on-premises, this time you want to try the cloud. You know AWS is the leading cloud platform, so you decide to give it a go. One of the first things you'll run into as you learn about AWS is the range of instance options available with AWS EC2.