Sponsored Post

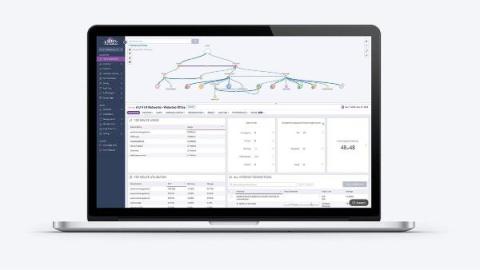

Telemetry Pipelines: Elevate Your Data Workflow with CloudFabrix

As digital infrastructures become increasingly hybrid, efficiently monitoring, analyzing, and acting on vast amounts of operational data has become a significant challenge. According to Gartner, the surge in data volumes, with some workloads producing petabytes of telemetry annually, has led to heightened complexity and soaring costs, potentially exceeding $10 million annually for large enterprises.