Analytics

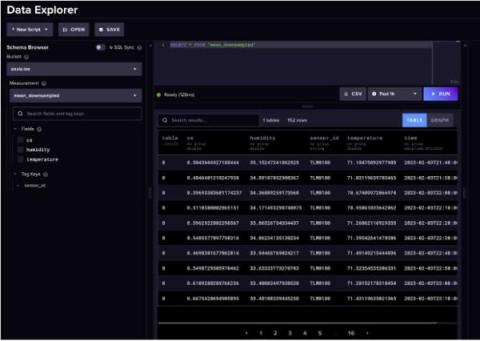

InfluxDB, Flight SQL, Pandas, and Jupyter Notebooks Tutorial

InfluxDB Cloud, powered by IOx, is a versatile time series database built on top of the Apache ecosystem. You can query InfluxDB Cloud with the Apache Arrow Flight SQL interface, which provides SQL support for working with time series data. This tutorial walks through querying InfluxDB Cloud with Flight SQL, exploring and analyzing the resulting data with Pandas in Jupyter Notebooks, and creating interactive plots and visualizations.

How to Use Flight SQL in Grafana

Upgrade Your IoT/OT Tech Stack: Replace Legacy Data Historians with InfluxDB

Manufacturing and industrial organizations are firmly in the era of Industry 4.0. The third industrial revolution, which introduced computers, robots, and automation into industrial processes, has given way to instrumentation and the use of advanced technologies such as machine learning (ML) and artificial intelligence (AI), applied to both raw and trained data, to enhance those processes.

Datadog on Data Engineering Pipelines: Apache Spark at Scale

Compactor: A Hidden Engine of Database Performance

This article was originally published in InfoWorld and is reposted here with permission. The compactor handles critical post-ingestion and pre-query workloads in the background on a separate server, enabling low latency for data ingestion and high performance for queries. Growing demand for high volumes of data has increased the need for databases that can handle both ingestion and querying with the lowest possible latency, which is to say with high performance.