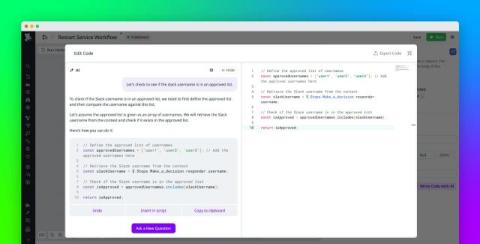

Build Datadog workflows and apps in minutes with our AI assistant

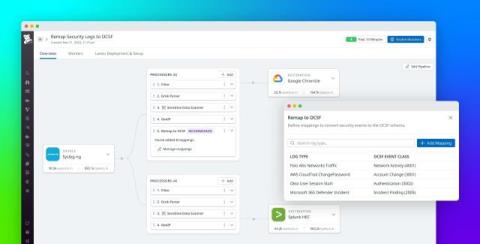

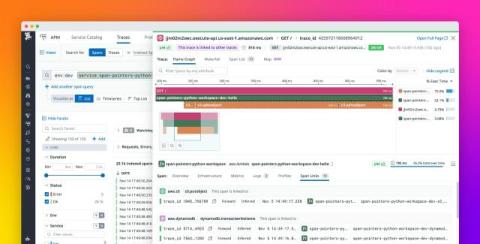

Datadog is a central hub of information: it lets you see logs, traces, and metrics from across your stack, and it notifies you of potential issues in one place. However, when Datadog alerts you to an issue, you often need to log in to other applications to fully assess and resolve it, which slows down mitigation.