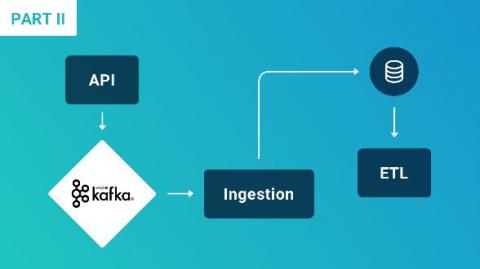

How AppSignal Monitors Their Own Kafka Brokers

Today, we dip our toes into collecting custom metrics with a standalone agent. We’ll take our own Kafka brokers as the example and use the StatsD protocol to get their metrics into AppSignal. This post is for readers who have some experience with monitoring tools and who want to extend monitoring to every corner of their architecture or add their own metrics to their monitoring setup.