The Great Irony of Serverless Computing

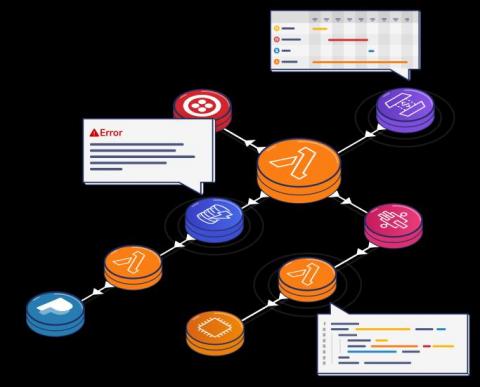

Working with serverless computing is like riding an electric bike. You get speed, flexibility, and automatic assistance that lets you scale with ease. Development is usually hassle-free because you can focus on code and pay only for what you use. Except when your users hit an error. Then debugging feels like your bike’s battery dying halfway up a steep hill.