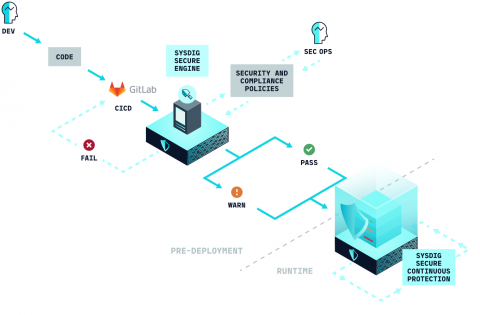

Integrating GitLab CI/CD with Sysdig Secure

In this blog post we cover how to perform Docker image scanning on the GitLab CI/CD platform using Sysdig Secure. Container images that don’t meet the security policies you define in Sysdig Secure fail the scan, which breaks the build pipeline before the image can be pushed to your production Docker registry.