
Logging

The latest news and information on log management, log analytics, and related technologies.

Structured logging best practices

BindPlane Agent Resiliency

Automatic log level detection reduces your cognitive load to identify anomalies at 3 am

Introducing Personalized Service Health: Upleveling incident response communications

Personalized Service Health sends custom granular alerts about Google Cloud service disruptions, and integrates with incident management tooling.

Dark Data: Discovery, Uses, and Benefits of Hidden Data

Data Lakes Explored: Benefits, Challenges, and Best Practices

Send your logs to multiple destinations with Datadog's managed Log Pipelines and Observability Pipelines

As your infrastructure and applications scale, so does the volume of your observability data. Managing a growing suite of tools while controlling costs, avoiding vendor lock-in, and maintaining data quality across an organization is increasingly complex. When your environment spans a variety of installed agents, log forwarders, and storage tools, the mechanisms you use to collect, transform, and route data need to evolve with that growth and meet your team's specific needs.

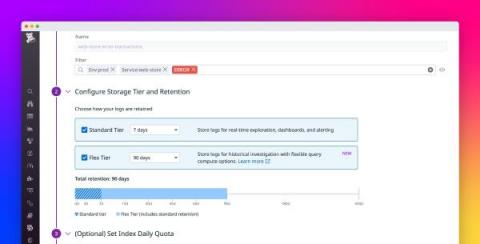
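The fan-out idea behind sending logs to multiple destinations can be sketched in a few lines of Python. This is a minimal, vendor-neutral sketch, not Datadog's Log Pipelines or Observability Pipelines API. The `Destination` class, its `accepts` filter and `transform` hook, the `route` function, and the in-memory `received` list (standing in for a real sink such as an archive or an analytics backend) are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Iterable, List

# Hypothetical event shape: a log event as a plain dictionary.
LogEvent = Dict[str, Any]


def identity(event: LogEvent) -> LogEvent:
    """Default transform: pass the event through unchanged."""
    return event


@dataclass
class Destination:
    """A hypothetical route: a filter, an optional transform, and a sink."""
    name: str
    accepts: Callable[[LogEvent], bool]
    transform: Callable[[LogEvent], LogEvent] = identity
    # In-memory list standing in for a real sink (archive, SIEM, etc.).
    received: List[LogEvent] = field(default_factory=list)


def route(events: Iterable[LogEvent], destinations: List[Destination]) -> None:
    """Send each event to every destination whose filter accepts it."""
    for event in events:
        for dest in destinations:
            if dest.accepts(event):
                # Shallow-copy so a transform that reassigns or drops keys
                # cannot alter the caller's event or another route's copy.
                dest.received.append(dest.transform(dict(event)))
```

Shallow-copying each event before transforming keeps one destination's edits (for example, dropping a hypothetical `user_ip` field before archiving) from leaking into another destination or back to the caller; nested values would still be shared, so a transform that mutates nested data would need a deep copy instead.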

Store and analyze high-volume logs efficiently with Flex Logs

Leveraging Git for Cribl Stream Config: A Backup and Tracking Solution

Connecting your Cribl Stream instance to a remote Git repo is a good way to keep a backup of your Cribl config. It also makes every Cribl Stream config change easy to track and review, which improves accountability and auditing. Our goal: configure Cribl Stream with a remote Git repo and with signed Git commits. Signed commits use cryptography to attach a digital signature to each Git commit.
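The Git side of that goal can be sketched with plain `git` commands driven from Python. This sketch assumes the Cribl Stream config lives in an ordinary local Git repository and that a GPG signing key already exists. The function names, repository path, remote URL, and key ID are hypothetical placeholders, and Cribl Stream's own Git settings may cover some of these steps, so check its documentation before scripting them. With `dry_run=True` (the default here) the function only returns the commands so they can be reviewed first.

```python
import subprocess
from typing import List


def signed_backup_commands(repo_dir: str, remote_url: str, signing_key: str) -> List[List[str]]:
    """Build git commands that add a backup remote and enable signed commits.

    All arguments are hypothetical placeholders supplied by the caller.
    """
    git = ["git", "-C", repo_dir]  # run each command inside repo_dir
    return [
        git + ["remote", "add", "origin", remote_url],     # remote used as the backup
        git + ["config", "user.signingkey", signing_key],  # key used to sign commits
        git + ["config", "commit.gpgsign", "true"],        # sign every commit by default
    ]


def configure_signed_backup(
    repo_dir: str, remote_url: str, signing_key: str, dry_run: bool = True
) -> List[List[str]]:
    """Return the commands and, unless dry_run is set, execute them in order."""
    commands = signed_backup_commands(repo_dir, remote_url, signing_key)
    if not dry_run:
        for cmd in commands:
            # check=True raises CalledProcessError if any git command fails.
            subprocess.run(cmd, check=True)
    return commands
```

After the next commit, `git verify-commit HEAD` (run in the same repository) checks the signature, and `git push origin <branch>` sends the signed history to the remote backup. `git remote add` fails if an `origin` remote already exists; `git remote set-url origin <url>` is the usual alternative in that case.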