Logging

The latest News and Information on Log Management, Log Analytics and related technologies.

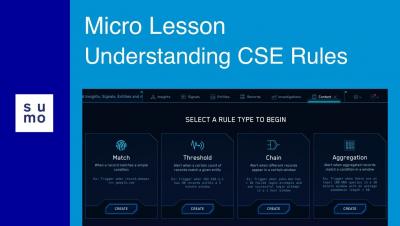

Microlesson: Understanding CSE Rules

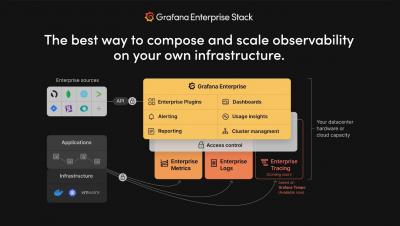

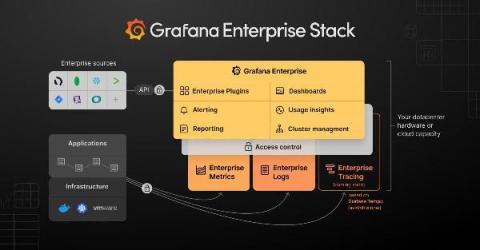

Grafana Enterprise Logs - First Look

Logz.io Celebrates the Release of OpenTelemetry v.1.0

OpenTelemetry 1.0 (OTel) is finally here (in fact, 1.0.1). The announcement brings the industry closer to a standard for observability. OpenTelemetry v1.0.1 focuses solely on tracing for now, but work continues on integrations for metrics and logs. A standard that covers all three is still a long way from reality. Metrics are in beta today, and this is where the community is focusing its effort. Logging is even earlier in its lifecycle.

Advanced Link Analysis: Part 1 - Solving the Challenge of Information Density

Link Analysis is a data analysis approach used to discover relationships and connections between data elements and entities. This is a very visual and interactive technique that can be done in the Splunk platform – and is almost always driven by a person, an analyst or investigator, to understand the data and discover necessary insights specific to the business problem at hand.

Introducing Grafana Enterprise Logs, a core part of the Grafana Enterprise Stack integrated observability solution

Today, we are launching a new Grafana Labs product, Grafana Enterprise Logs. Powered by the Grafana Loki open source project for cloud native log aggregation, and built by the maintainers of the project, this offering is an exciting addition to our growing self-managed observability stack tailored for enterprises.

An Intro to PromQL: Basic Concepts & Examples

PromQL, short for Prometheus Query Language, is the main way to query metrics within Prometheus. You can display an expression's result as a graph or export it using the HTTP API. PromQL uses three data types: scalars, range vectors, and instant vectors. It also uses strings, but only as literals. This intro will provide basic PromQL examples and concepts to understand as you get used to Prometheus queries.
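As a rough illustration of the HTTP API route and the data types mentioned above, the following Python sketch builds an instant-query URL for Prometheus's `/api/v1/query` endpoint and maps the `resultType` field of a response onto the PromQL type names. The server address, the helper names, and the sample response body are assumptions made for this example; nothing here contacts a real server.

```python
import json
from urllib.parse import urlencode

# Assumed address of a local Prometheus server; adjust for your setup.
PROMETHEUS_URL = "http://localhost:9090"

# The HTTP API reports result types as "scalar", "vector", "matrix" and
# "string"; these correspond to the PromQL data types named in the text.
RESULT_TYPE_NAMES = {
    "scalar": "scalar",
    "vector": "instant vector",
    "matrix": "range vector",
    "string": "string",
}


def build_instant_query_url(expr, base_url=PROMETHEUS_URL):
    """Return the URL of an instant query for a PromQL expression.

    Hypothetical helper: it only URL-encodes the expression into the
    `query` parameter of /api/v1/query.
    """
    return f"{base_url}/api/v1/query?{urlencode({'query': expr})}"


def describe_result(response_body):
    """Return the PromQL data type of a JSON response from the query API."""
    payload = json.loads(response_body)
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload.get('error', 'unknown error')}")
    return RESULT_TYPE_NAMES[payload["data"]["resultType"]]


# Hand-written sample response in the shape the API returns for `up`.
SAMPLE_RESPONSE = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"__name__": "up", "job": "prometheus"},
             "value": [1435781451.781, "1"]},
        ],
    },
})
```

Calling `build_instant_query_url("up")` yields a URL that can be opened with any HTTP client, and `describe_result(SAMPLE_RESPONSE)` reports that the selector `up` evaluates to an instant vector. An expression with a range selector, such as `http_requests_total[5m]` (a hypothetical metric name), would come back with the `matrix` result type, which is a range vector.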

The essential config settings you should use so you won't drop logs in Loki

In this post, we’re going to talk about tips for securing the reliability of Loki’s write path (where Loki ingests logs). More succinctly, how can Loki ensure we don’t lose logs? This is a common starting point for those who have tried out the single binary Loki deployment and decided to build a more production-ready deployment. Now, let’s look at the two tools Loki uses to prevent log loss.

Three ways tight integration makes logging and monitoring easier

Driving the productivity of software development and delivery teams is critical for any organization. Six years of research by DevOps Research and Assessment (DORA) show the role that easy-to-use tooling plays in driving this productivity and, in turn, a better work/life balance for the team. The research finds that the highest-performing teams are 1.5x more likely to have tools they consider easy to use.

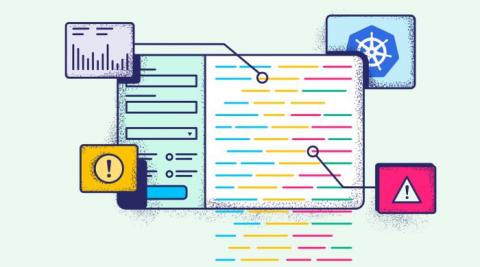

The Coralogix Operator: A Tale of ZIO and Kubernetes

As our customers scale and utilize Coralogix for more teams and use cases, we decided to make their lives easier and allow them to set up their Coralogix account using declarative, infrastructure-as-code techniques. In addition to setting up Log Parsing Rules and Alerts through the Coralogix user interface and REST API, Coralogix users are now able to use modern, cloud-native infrastructure provisioning platforms.