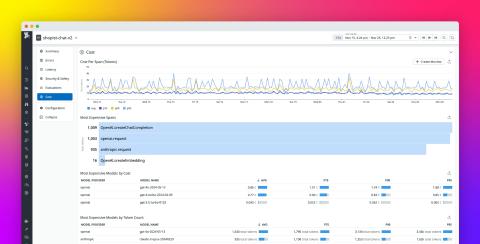

Monitor your OpenAI LLM spend with cost insights from Datadog

Managing LLM provider costs has become a chief concern for organizations building and deploying custom applications that consume services like OpenAI. These applications often rely on multiple backend LLM calls to handle a single initial prompt, which leads to rapid token consumption and, consequently, rising costs. But shortening prompts or chunking documents to reduce token consumption can be difficult and can introduce performance trade-offs, including an increased risk of hallucinations.