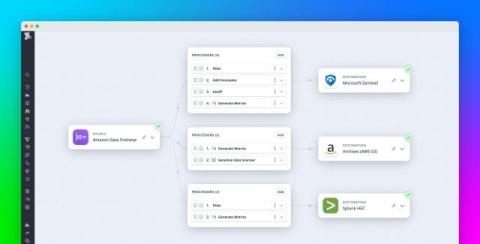

Increase control and reduce noise in your AWS logs using Datadog Observability Pipelines

Today’s SRE and security operations center (SOC) teams are often overwhelmed by the sheer volume and variety of logs from critical AWS sources such as VPC Flow Logs, AWS WAF, and Amazon CloudFront. These logs are valuable for detecting and investigating security threats and for troubleshooting issues in your environment, but managing them at scale can be challenging and costly.