Messaging

8 Real-World MQTT Use Cases

MQTT is becoming the standard protocol for applications that run where network connectivity is intermittent or unreliable, where reducing bandwidth usage is a priority, or where hardware resources are limited. In this post you will learn about specific use cases where businesses are seeing value from making MQTT part of their tech stack.

Modernising applications? Integration with APIs is the way to go

Today’s enterprises can relate to this logic, especially when it comes to digital transformation projects. They have broken systems that need fixing, but they also spend a lot of effort fixing systems that don’t necessarily need that level of intervention. Specifically, companies often face a dilemma: digital modernisation requires apps and systems to be upgraded to operate within new technology norms, yet those same systems often exist for good reasons.

How to deploy a React app to Kubernetes using Docker

Containerization lets you run applications in lightweight, isolated environments that behave much like small virtual machines. Setting up local development environments can be tiresome for web developers, but tools like Docker and Kubernetes make it quicker to set up and deploy applications. This guide uses Docker to deploy a React app to Kubernetes.

Blockchain sanctions and the future of open source - Open Source Matters

Integration with Apache Kafka

You can integrate Edge Flow Manager (EFM) with Apache Kafka to forward agent heartbeats to defined Kafka topics. The integration requires configuring both Kafka and EFM properties. EFM supports forwarding the agent heartbeats and acknowledgement messages exchanged over the C2 protocol between the EFM server and MiNiFi agents.

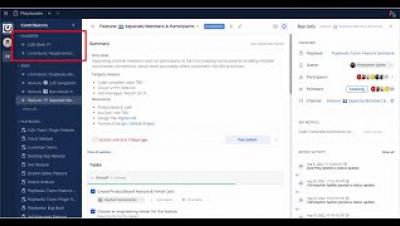

Mattermost v7.3 is now available

Mattermost v7.3 is generally available today. The following new features are included.

How to Break Stuff with Chaos Engineering and Chaos Mesh

In 2011, a Netflix engineering team introduced the concept of chaos engineering with its release of Chaos Monkey. It began as an in-house tool for orchestrating fault injection, which Netflix eventually made open source. However, Chaos Monkey's reliance on Spinnaker, another Netflix engineering project, imposes some limitations.

How to use OpenTelemetry for Kafka Monitoring

Apache Kafka is a high-throughput, low-latency platform for handling real-time data feeds. Its storage layer is, in essence, a massively scalable pub/sub message queue designed as a distributed transaction log. It can be used to process streams of data in real time, building up a commit log of changes. Kafka guarantees ordering within each partition, which enables it to handle many dataflow patterns, including very low latency messaging and efficient multicast publish/subscribe.
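To make the "distributed log" idea more concrete, here is a minimal in-memory sketch of a partitioned, append-only log with per-group read offsets. It is a toy model for illustration only, not Kafka's API: the `ToyLog` class, its `publish` and `poll` methods, and the CRC32-based key hashing are all assumptions made up for this example.

```python
import zlib
from collections import defaultdict


class ToyLog:
    """Toy model of a partitioned, append-only log (hypothetical, not Kafka's API)."""

    def __init__(self, num_partitions=3):
        # Each partition is an append-only list of (key, value) records.
        self.partitions = [[] for _ in range(num_partitions)]
        # Each consumer group keeps its own read offset per partition.
        self.offsets = defaultdict(lambda: [0] * num_partitions)

    def publish(self, key, value):
        # Records with the same key always map to the same partition,
        # so their relative order is preserved within that partition.
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[partition].append((key, value))
        return partition, len(self.partitions[partition]) - 1

    def poll(self, group, partition):
        # Return the records this group has not read yet, then advance its offset.
        start = self.offsets[group][partition]
        records = self.partitions[partition][start:]
        self.offsets[group][partition] = len(self.partitions[partition])
        return records


log = ToyLog()
for reading in range(3):
    partition, _ = log.publish("sensor-a", reading)

# Two independent groups each see the full, ordered stream (multicast pub/sub).
print(log.poll("billing", partition))
print(log.poll("analytics", partition))
```

Because records are never removed when read, any number of consumer groups can replay the same partition at their own pace, which is the property the excerpt refers to as multicast publish/subscribe.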

What is Distributed Tracing vs OpenTelemetry?

There are a few key differences between distributed tracing and OpenTelemetry. One is that OpenTelemetry offers a more unified approach to instrumentation, while distributed tracing takes a more granular approach. This means that OpenTelemetry can be less time-consuming to set up, but it doesn’t necessarily offer as much visibility into your system as distributed tracing does.