
Featured Posts

How To Reduce Incident Tickets

In IT environments, incidents happen all the time, and it's impossible to prevent all of them. Regardless of the software solutions available or the level of technical training of users and developers, no organization is immune to incidents. Growing dependence on IT infrastructure to provide core services means that any disruption in IT services can cause an organization significant financial and reputational harm. For example, IT service providers need to resolve customer support tickets within the terms of their service-level agreements (SLAs), and failing to do so makes them liable for breaching those agreements.

What are Runbooks? And why are they needed?

Imagine being an Ops engineer on a team just struck by tragedy. Alarms start ringing, and incident response is in full force. It may sound like the situation is under control. WRONG! There's panic everywhere. The on-call team is scrambling for the heavenly door to redemption. But one thing doesn't stop: stakeholder inquiries. This situation is bad. But it could be worse. Now imagine being a less-experienced Ops engineer on a relatively small on-call team struck by tragedy. If you don't have sufficient guidance, let alone moral support, you're toast.

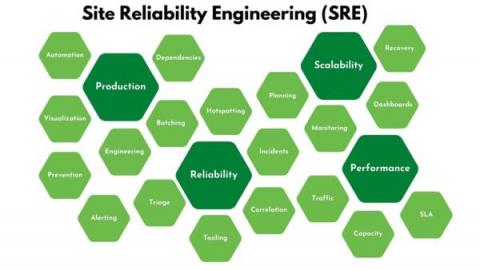

Site Reliability Engineering: Definition, Principles & How It Differs From DevOps

Site crashes and outages can cost hundreds of thousands in lost revenue and inconvenience users. Site Reliability Engineering helps build highly reliable and scalable systems, which is particularly important for companies that depend on their software to support customers performing critical operations. Hiring a Site Reliability Engineer is the best way to ensure a software system stays up and running at all times. Not only will they help manage infrastructure and applications, but they'll also be able to advise on how to scale a business as it grows, keeping downtime and incidents to a minimum!

5 Solutions for a Complex Tech Stack

Modern Observability and Digital Transformation

For most businesses, effective digital transformation is a key strategic objective, and as computing infrastructure grows in complexity, end-to-end observability has never been more important to this cause. However, the volume of data and the number of dynamic technologies required to keep up with demand continue to increase, and current tools are not equipped to handle them, with any discrepancies resulting in rising costs and reduced competitiveness.

Datadog & Speedscale: Improve Kubernetes App Performance

By combining traffic replay capabilities from Speedscale with observability from Datadog, SRE teams can deploy with confidence. It makes sense to centralize your monitoring data into as few silos as possible. With this integration, Speedscale pushes the results of various traffic replay conditions into Datadog so they can be combined with the other observability data. Being able to preview application performance by simulating production conditions allows better release decisions. Moreover, a baseline against which to compare production metrics can provide even earlier signals of degradation and scale problems. Speedscale joined the Datadog Marketplace so customers can shift left the discovery of performance issues.

An Introduction to Automation Basics

Automation is a powerful tool. With some foresight and a little elbow grease, you can save hours, days, or even months of work by strategically automating repetitive tasks. What makes automation particularly beneficial is that it eliminates manual interaction with multiple systems. Rather than manually uploading data to an event response system or notifying key support personnel of an incident, you can tie these tasks together through automation to save critical time and resolve problems faster and more efficiently. But before we can fill in the gaps between all of the platforms we are responsible for, we first need to understand how data moves around on the web and how we can use that process to our advantage.
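As a rough illustration of how data might move between systems, here is a minimal sketch that packages an incident as a JSON payload and prepares an HTTP POST for an event response system. The endpoint URL, the payload fields (`summary`, `severity`, `source`), and the function names are assumptions made for this example, not the API of any particular product.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with your event response system's real webhook URL.
WEBHOOK_URL = "https://events.example.com/incidents"


def build_incident_request(summary: str, severity: str, source: str,
                           url: str = WEBHOOK_URL) -> urllib.request.Request:
    """Package an incident as a JSON HTTP POST request (nothing is sent yet)."""
    # The field names below are illustrative; real systems define their own schema.
    payload = {"summary": summary, "severity": severity, "source": source}
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_incident(request: urllib.request.Request, timeout: float = 5.0) -> int:
    """Send the prepared request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    # Build the request and inspect it without touching the network.
    req = build_incident_request("Disk usage above 90%", "warning", "db-01")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Keeping the request-building step separate from the sending step makes it possible to inspect or test the payload before anything goes over the wire. Calling `send_incident(req)` would only succeed against a real endpoint that accepts this payload.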