
Logging

The latest News and Information on Log Management, Log Analytics and related technologies.

A Tale of Two Realities: Do Your Execs Know What It Takes to Manage ELK?

We’ve all experienced it: executives with unrealistic expectations who vastly underestimate how long our work can take. Most of us assume that is the exception rather than the norm. But when it comes to monitoring and troubleshooting, it seems all too commonplace.

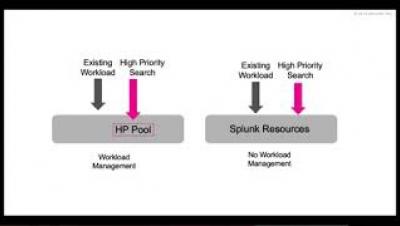

High Priority Search Execution with Splunk Workload Management

Deploying the ELK Stack on Kubernetes with Helm

ELK and Kubernetes usually appear in the same sentence when someone is describing a monitoring stack. ELK integrates natively with Kubernetes and is a popular open-source solution for collecting, storing and analyzing Kubernetes telemetry data. However, the two are increasingly used together in another context: Kubernetes as a platform for deploying and managing ELK itself.

Looker - A single source of truth in a multi-source world

We Live in an Intelligence Economy - Illuminate 2019 recap

What a pleasure it was to see many of our customers at our Illuminate user conference, September 11-12. We had record attendance from customers, influencers, and partners. Our time was packed with keynotes, customer presentations (35 customer breakout sessions), certifications, best-practice sharing, networking, and having fun together.

Lighten Up! Easily Access & Analyze Your Dark Data

Jim Barksdale, former CEO of Netscape, once said “If we have data, let’s look at data. If all we have are opinions, let’s go with mine.” While Jim may have said this in jest, the exponential boom in data collection indicates that we increasingly prefer to rely on facts rather than conjecture when making business decisions. More data yields greater insights about customer preferences and experiences, internal processes, and security vulnerabilities — just to name a few.

How to Manage Linux Logs

Log files in Linux often contain information that can assist in tracking down the cause of issues hampering system or network performance. If you have multiple servers or levels of IT architecture, the number of logs you generate can soon become overwhelming. In this article, we’ll be looking at some ways to ease the burden of managing your Linux logs.

Service Levels: I Want To Buy A Vowel

Everything old is new again: that’s a universal law! In our industry, we have talked about Service Level Agreements (SLAs) for a long time. At Sumo, we are proud to continue to be the only Machine Data Analytics Platform as a Service that commits to a query performance SLA.

Parsing Log Files With Graylog - Ultimate Guide

Log file parsing is the process of analyzing log file data and breaking it down into logical syntactic components. In simple terms, you extract meaningful data from logs that can run to thousands of lines. There are multiple ways to parse log files: you can write a custom parser, or you can use dedicated parsing tools and software.
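The following minimal Python sketch illustrates the custom-parser route. It uses a regular expression with named groups to split each line into fields. It assumes classic syslog-style lines; the sample line, the pattern, and the helper names `parse_line` and `parse_lines` are hypothetical. This is not how Graylog's own extractors or pipeline rules are written.

```python
import re
from typing import Iterable, List, Optional

# Hypothetical pattern for a classic syslog-style line, e.g.
# "Sep 12 14:03:27 web01 sshd[2211]: Failed password for root from 10.0.0.5 port 52144 ssh2"
# Illustrative assumption only; real log formats vary widely.
SYSLOG_LINE = re.compile(
    r"^(?P<timestamp>\w{3}\s+\d{1,2} \d{2}:\d{2}:\d{2}) "  # e.g. "Sep 12 14:03:27"
    r"(?P<host>\S+) "                                      # hostname
    r"(?P<program>[^\[:]+)(?:\[(?P<pid>\d+)\])?: "         # program, optional [pid]
    r"(?P<message>.*)$"                                    # free-text remainder
)


def parse_line(line: str) -> Optional[dict]:
    """Split one log line into named fields; return None if it doesn't match."""
    match = SYSLOG_LINE.match(line.rstrip("\n"))
    if match is None:
        return None
    fields = match.groupdict()
    # The pid is optional in syslog lines; convert it to int when present.
    if fields["pid"] is not None:
        fields["pid"] = int(fields["pid"])
    return fields


def parse_lines(lines: Iterable[str]) -> List[dict]:
    """Parse many lines, keeping only those that match the pattern."""
    parsed = (parse_line(line) for line in lines)
    return [fields for fields in parsed if fields is not None]


if __name__ == "__main__":
    sample = "Sep 12 14:03:27 web01 sshd[2211]: Failed password for root from 10.0.0.5 port 52144 ssh2"
    record = parse_line(sample)
    print(record["host"], record["program"], record["pid"])  # web01 sshd 2211
```

In this sketch, lines that don't fit the expected format are dropped rather than raising an error. A production parser would more likely route them to a fallback pattern or a raw "unparsed" field so no data is silently lost.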