
Serverless

The latest News and Information on Serverless Monitoring, Management, Development and related cloud technologies.

Live Dashboard for Azure Serverless Applications

Cold starts get the cold shoulder - Provisioned Concurrency has changed the game

Among the many, MANY announcements at re:Invent this year is one that settles a years-long concern among people considering AWS Lambda for building serverless applications: cold starts. AWS Lambda is now 5 years old, and for all of that time there's been worry about the extra latency when a function is invoked and no initialized execution environment is ready to serve it. Well, fret no more: with Provisioned Concurrency you pay an additional fee to keep a set number of execution environments initialized, so invocations up to that level are served without a cold start.

From "Secondary Storage" To Just "Storage": A Tale of Lambdas, LZ4, and Garbage Collection

When we introduced Secondary Storage two years ago, it was a deliberate compromise between economy and performance. Compared to Honeycomb’s primary NVMe storage attached to dedicated servers, secondary storage let customers keep more data for less money. They could query over longer time ranges, but with a substantial performance penalty; queries which used secondary storage took many times longer to run than those which didn’t.

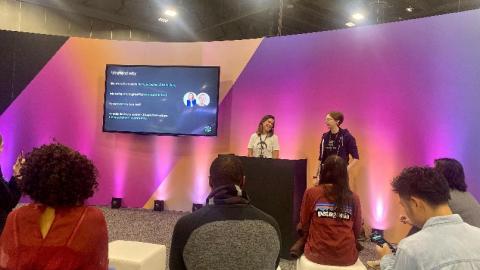

re:Invent Serverless Talks - Collaboration, Community, & Career Development

At re:Invent 2019, Farrah and I gave a talk on our paths into the tech industry, how serverless helped us both build some of our first products, and how serverless represents a new mindset. I’d wanted to share a version of that talk on our blog for those who couldn’t make it, though we hope to give it again in the near future.

Stackery User Stories: Matt Wallington, CTO of Sightbox @ AWS re:Invent

AWS re:Invent 2019 - API Gateway HTTP Proxy

API Gateway is a serverless AWS service for exposing cloud services through private or public HTTPS endpoints. Many serverless teams use it to connect frontend applications to backend systems in a secure, scalable and seamless way. API Gateway integrates with Lambda, DynamoDB, S3 and a variety of other AWS services. The main issue with API Gateway, so far, has been its cost: at $3.50 per million requests, it can be more expensive than Lambda itself.
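To make the cost comparison concrete, here is a rough back-of-the-envelope calculator. Only the $3.50-per-million API Gateway figure comes from the paragraph above; the Lambda prices ($0.20 per million requests and $0.0000166667 per GB-second) are assumed list prices, the free tier is ignored, and the 10-million-request, 128 MB, 100 ms workload is hypothetical. Check current AWS pricing before relying on any of these numbers.

```python
# Rough cost comparison: API Gateway (REST) vs. the Lambda function behind it.
# All prices are ASSUMPTIONS in USD (list prices, free tier ignored).

API_GATEWAY_PER_MILLION = 3.50        # from the article: $3.50 per million requests
LAMBDA_REQUEST_PER_MILLION = 0.20     # assumed Lambda request price
LAMBDA_PER_GB_SECOND = 0.0000166667   # assumed Lambda compute price


def api_gateway_cost(requests: int) -> float:
    """Charge for routing `requests` through API Gateway."""
    return requests / 1_000_000 * API_GATEWAY_PER_MILLION


def lambda_cost(requests: int, memory_mb: int, duration_ms: float) -> float:
    """Lambda request charge plus compute charge (billed in GB-seconds)."""
    request_charge = requests / 1_000_000 * LAMBDA_REQUEST_PER_MILLION
    gb_seconds = requests * (duration_ms / 1000) * (memory_mb / 1024)
    return request_charge + gb_seconds * LAMBDA_PER_GB_SECOND


if __name__ == "__main__":
    # Hypothetical workload: 10 million requests to a 128 MB function
    # that runs for 100 ms per invocation.
    n = 10_000_000
    apigw = api_gateway_cost(n)
    lam = lambda_cost(n, memory_mb=128, duration_ms=100)
    print(f"API Gateway: ${apigw:.2f}  Lambda: ${lam:.2f}")
```

Under these assumptions the script prints `API Gateway: $35.00  Lambda: $4.08`: for a short-running, low-memory function, the API Gateway request charge dominates the bill.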

AWS re:Invent 2019 - Serverless Announcements Recap

We compiled all the announcements from AWS re:Invent 2019 that are relevant to serverless teams, broken down by service.

Why Cassandra Serverless Is a Game-Changer

Up until now, DynamoDB has been the only truly serverless database option battle-tested in production environments. Especially since the launch of its on-demand capacity mode, it has been a perfect-fit database engine for serverless projects.

How to monitor Lambda functions

As serverless application architectures have gained popularity, AWS Lambda has become the best-known service for running code on demand without having to manage the underlying compute instances. From an ops perspective, running code in Lambda is fundamentally different from running a traditional application. Most significantly, from an observability standpoint, there are no application servers whose system-level metrics you can inspect.
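Without a server to inspect, one common substitute is the `REPORT` line Lambda writes to CloudWatch Logs at the end of each invocation, which records duration, billed duration, memory size and memory used, plus an `Init Duration` field when a new execution environment had to be initialized. The sketch below assumes that tab-separated format (field names may vary by runtime or change over time), does not fetch anything from CloudWatch, and uses made-up sample lines and request IDs. It treats the presence of `Init Duration` as a cold-start signal.

```python
import re
from typing import Dict, Iterable, Optional, Tuple

# "Name: value unit" pairs inside a REPORT line, e.g. "Duration: 12.34 ms" or
# "Max Memory Used: 18 MB". RequestId has no ms/MB unit, so it is skipped.
_FIELD = re.compile(r"([A-Za-z ]+):\s*([\d.]+)\s*(?:ms|MB)")


def parse_report(line: str) -> Optional[Dict[str, float]]:
    """Return the numeric fields of a Lambda REPORT line, or None for any other line."""
    if not line.startswith("REPORT "):
        return None
    return {name.strip(): float(value) for name, value in _FIELD.findall(line)}


def summarize(lines: Iterable[str]) -> Tuple[int, int]:
    """Return (invocations, cold starts), using "Init Duration" as the cold-start marker."""
    reports = [r for r in map(parse_report, lines) if r is not None]
    cold_starts = sum(1 for r in reports if "Init Duration" in r)
    return len(reports), cold_starts


# Hypothetical sample lines in the assumed REPORT format.
SAMPLE_LINES = [
    "START RequestId: 1b9c0a7e-0000-4000-8000-000000000001 Version: $LATEST",
    "REPORT RequestId: 1b9c0a7e-0000-4000-8000-000000000001\tDuration: 45.10 ms\t"
    "Billed Duration: 100 ms\tMemory Size: 128 MB\tMax Memory Used: 20 MB\t"
    "Init Duration: 160.50 ms\t",
    "REPORT RequestId: 1b9c0a7e-0000-4000-8000-000000000002\tDuration: 12.34 ms\t"
    "Billed Duration: 100 ms\tMemory Size: 128 MB\tMax Memory Used: 18 MB\t",
]

if __name__ == "__main__":
    invocations, cold = summarize(SAMPLE_LINES)
    print(f"{invocations} invocations, {cold} cold start(s)")
```

Running it on the sample lines prints `2 invocations, 1 cold start(s)`. In practice the lines would come from a CloudWatch Logs query or subscription, and the per-field values could feed a dashboard or alert.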