Cloud

The latest news and information on cloud monitoring, security, and related technologies.

How to Monitor Azure VM Scale Sets

With Azure Virtual Machine (VM) Scale Sets you can automatically scale the number of VMs running an application based on the compute resources required. VM Scale Sets make it easier to deploy and manage a large number of Virtual Machines consistently and allow you to use and pay for the minimum resources needed at any given time, but they also introduce a few monitoring challenges.

Containerizing Legacy Windows Applications

There should be no doubt anymore that containers are revolutionizing the world of application development and leading the charge for purpose-built cross-cloud and hybrid-cloud topologies. There are other virtualization platforms that solved problems in server consolidation and data center optimization, but in the new world of cloud and mobility, proprietary monolithic middleware may have had its day.

The Need for Security-Specific Applications

When we talk about cloud providers, we often forget that not all data is the same, even within a single application. We might think of this data as coming from a “financial application” or a “computation process”, but in reality each data set has subsets upon subsets, and each requires specific applications to manage it.

Stackery App One-Minute Demo

A Greater Gatsby: Modern, Static-Site Generation

Gatsby is currently generating a ton of buzz as the new hot thing for generating static sites. This has led to a number of frequently asked questions, like: A static…what now? How is GraphQL involved? Do I need to set up a GraphQL server? What if I’m not a great React developer, really more of a bad React developer?

Announcing Cloud Data Encryption for Opsgenie

Opsgenie Edge Encryption is a new feature that makes it easy to secure sensitive data and meet compliance requirements while using Opsgenie for alerting and incident management. Edge Encryption secures data before it leaves your environment, you manage the encryption keys, and the experience is seamless for users. Atlassian has no access to the encrypted data and neither do potential attackers.

Introduction to the Integration Module for Data Manipulation

Creating Cognito User Pools with CloudFormation

I’ve been working on creating AWS Cognito User Pools in CloudFormation, and thought this would be a good time to share some of what I’ve learned.

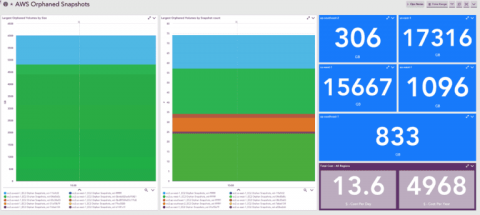

How to Identify Orphaned EBS Snapshots to Optimize AWS Costs

So a while back I got an email from our finance team. I was tasked with helping tag resources in our AWS infrastructure and investigating which items were contributing to certain costs. I don’t know about other engineers, but these kinds of tasks are in the same realm of fun as … wiping bird poop off your windshield at a gas station. So I did the sanest thing I could think of.