Latest News

Goliath Partners with Automai for App Performance Monitoring

Philadelphia, PA and Los Angeles, CA – August 25, 2020 – Goliath Technologies, a leader in end-user experience monitoring and troubleshooting software, and Automai, a specialist in application monitoring, today announced a partnership to extend Goliath’s solution with visibility into users’ experiences working within applications.

Open Source Grafana Tutorial: Getting Started

Open source Grafana is one of the most popular OSS UIs for metrics and infrastructure monitoring today. Capable of ingesting metrics from the most popular time series databases, it’s an indispensable tool in modern DevOps. This OSS Grafana tutorial will go over installation, configuration, queries, and initial metrics shipping. Open source Grafana is to metrics what Kibana is to logs (for more, see Grafana vs. Kibana).

Best Certifications for IT Professional Careers

The job market for IT professionals right now is challenging. Whether you’re seeking your first job in IT or looking to advance into a more senior role, certifications serve as a way to stand out from the crowd of applicants. Certifications show a functional level of proficiency, often making them more valuable than college degrees in certain entry-level positions, and just as valuable as years of experience in more established roles.

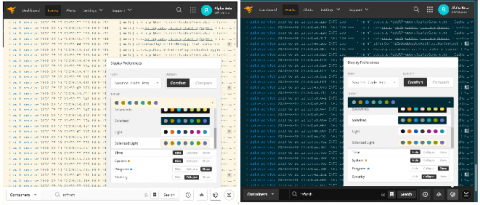

3 tips to improve your Grafana dashboard design

Every Grafana user is a dashboard designer. The Grafana community gladly shares their dashboards, so there’s tons of inspiration available. Chances are you’ve downloaded some community dashboards and tweaked them in search of patterns that work for you. But if you haven’t found them, you’re not alone! In my Aug. 27 webinar, “A beginner’s guide to dashboard design,” I’ll cover the basics of good dashboard design.

6 Things to consider in a Prometheus monitoring platform

Organizations are turning in droves to Prometheus to monitor their container and microservice estates, but larger companies often run headlong into a wall: They face scaling challenges when they move beyond a handful of apps.

How to create an Azure storage lifecycle management policy

Whether you are using our Cloud Storage Management software to gain insights into your Azure storage environment or are just trying to work out how to cut costs within Azure, creating a lifecycle management policy is a great way to reduce your Azure storage costs.

Web Performance Profiling: Nike.com

Google has long used website performance as a ranking criterion for search results. Despite the importance of page experience for SEO, many sites still suffer unacceptable load times. Poor performance is often a confluence of factors: slow time to first byte, hundreds of resource requests, and way too much JavaScript.

Get paid to write open source software working from home

Open source companies are amazing places for engineers to work. Your work is showcased to the world through private open source companies like GitLab and HashiCorp (makers of Terraform and Vault), and through public ones like Elastic, GitHub, and Red Hat, all of which have enormous impact.