
Latest News

Accelerate your logs investigations with Watchdog Insights

If you’re investigating an incident, every minute means degraded performance or even downtime for customers. The causes of an issue often come from parts of your systems and applications that you would not think to check, and the sooner you can bring these to light, the better.

Analyze JMX to Better Assess The Health Of Your Java Applications

Exploring the Value of your Google Cloud Logs and Metrics

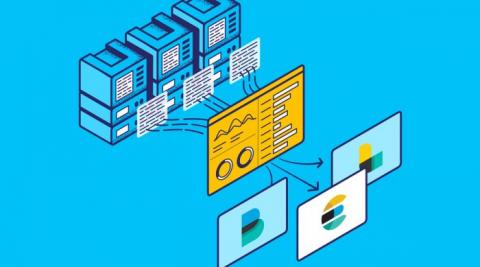

With our ability to ingest GCP logs and metrics into Splunk and Splunk Infrastructure Monitoring, there’s never been a better time to start driving value out of your GCP data. We’ve already started to explore this with the great blog from Matt here: Getting to Know Google Cloud Audit Logs. Expanding on this, there’s now a pre-built set of dashboards available in a Splunkbase App: GCP Application Template for Splunk!

Doubling Down: What It's Like Contributing to Open Source at Logz.io

Logz.io has always prided itself on being a company that pushes the use of open source tech. As we have moved to expand our reach with metrics and traces over the past year and a half, we have doubled down on our own contributions to the community. With (distributed) traces in particular, we have been able to forge ahead. Our relationships with the teams at Jaeger and OpenTelemetry have really blossomed (and we are kind of proud to have supported the latter in the run-up to the OpenTelemetry v1.0 release).

Metricbeat Deep Dive: Hands-On Metricbeat Configuration Practice

Metricbeat, an Elastic Beat based on the libbeat framework from Elastic, is a lightweight shipper that you can install on your servers to periodically collect metrics from the operating system and from services running on the server, covering everything from CPU and memory to Redis and NGINX. Metricbeat takes the metrics and statistics that it collects and ships them to the output that you specify, such as Elasticsearch or Logstash.

All together now: Bringing your GKE logs to the Cloud Console

Troubleshooting an application running on Google Kubernetes Engine (GKE) often means poking around various tools to find the key bit of information in your logs that leads to the root cause. With Cloud Operations, our integrated management suite, we’re working hard to provide the information that you need right where and when you need it. Today, we’re bringing GKE logs closer to where you are—in the Cloud Console—with a new logs tab in your GKE resource details pages.

New in Grafana 7.4: Export usage data to Loki to help manage dashboard sprawl and troubleshoot faster

We first released the usage insights Enterprise feature in Grafana 7.0 based on feedback from customers that they would like to better understand how their users are interacting with Grafana, including the dashboards they visit, the information they query, and where they run into issues. What we learned was that dashboard sprawl is a real issue: Administrators estimate that almost 60% of dashboards might not be used at all.

Logging Errors in Web Workers

Release 3.8.0 of the TrackJS browser agent added support for Web Workers, which adds some awesome new observability to the background tasks of your web applications. Many development teams have adopted Web Workers in their web applications to add offline support or caching, or to process heavy tasks. Workers allow web apps to feel faster by removing work from the user interface thread.

Why you should use Central Error Logging Services

Logs are vital for every application that runs in a server environment. They provide essential information that indicates whether the current system is operating properly. By looking through logs, you can gather data on system issues, errors, and trends. However, it is not feasible to manually look up errors on various servers across thousands of log files. The solution? Central error logging services.