
Tracing

The latest news and information on distributed tracing and related technologies.

NodeJS Instrumentation - Capturing Performance of External Services | Datadog Tips & Tricks

Debugging, distributed tracing, and profiling for web applications

NodeJS Instrumentation - Adding Custom Tags to Spans | Datadog Tips & Tricks

Jaeger Turns Five: A Tribute to Project Contributors

August 3rd, 2015 was the date of the first commit in the internal Jaeger repository at Uber. Technically, the project's true birthday was probably a week or so earlier. While I was prototyping the collector service, we went through a number of project names (some of them rather embarrassing to name here), and the real first commits happened in a differently named repository.

From 0 to Insight with OpenTelemetry in Go

Curious how to get data flowing from your Go application into Honeycomb using OpenTelemetry? This guide walks you step by step through adding OpenTelemetry to your web service and sending the data to Honeycomb.

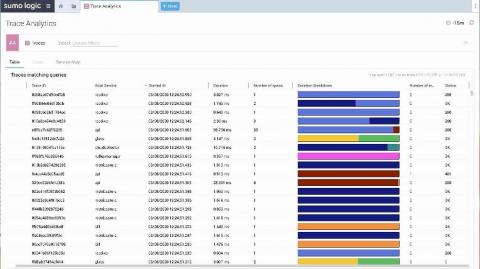

Introducing the Sumo Logic Observability suite with distributed tracing (beta) - a cornerstone of cloud-native APM

Jaeger Essentials: Best Practices for Deploying Jaeger on Kubernetes in Production

Logs, metrics, and traces are the three pillars of observability. Distributed tracing in particular has seen a lot of innovation in recent months, with the OpenTelemetry standardization effort and the Jaeger open-source project graduating from CNCF incubation. According to the recent DevOps Pulse report, Jaeger is used by over 30% of those practicing distributed tracing.

Where did all my spans go? A guide to diagnosing dropped spans in Jaeger

Nothing is more frustrating than finally finding the perfect trace, only to discover that it is missing critical spans. In fact, a common question from new users and operators of Jaeger, the popular distributed tracing system, is: “Where did all my spans go?” In this post we’ll discuss how to diagnose and correct lost spans at each stage of the Jaeger ingestion pipeline.

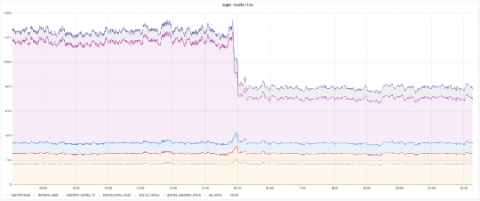

How to maximize span ingestion while limiting writes per second to Scylla with Jaeger

Jaeger primarily supports two storage backends: Cassandra and Elasticsearch. Here at Grafana Labs we use Scylla, an open-source, Cassandra-compatible database. In this post we’ll look at how we run Scylla at scale and share some techniques for reducing load while ingesting even more spans. We’ll also share some internal metrics on Jaeger load and Scylla backend performance. Special thanks to the Scylla team for taking the time to talk with us about performance and configuration!