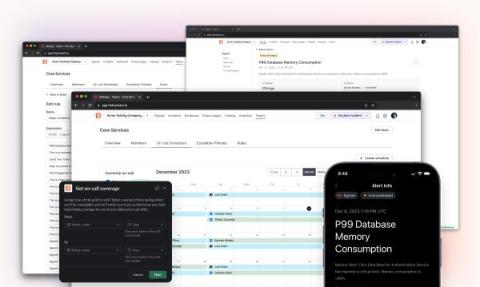

Now in beta: alerting for modern DevOps teams

Although FireHydrant has spent five years focused on what happens after your team (erg, I mean service 🙄) gets paged, the topic of alerting often comes up in discussions with our community. People are tired of paying big bucks for software that’s bloated and hasn’t seen much innovation. Clearly, there’s a problem here – and we’re tackling it head-on.