How to Monitor Host Metrics with OpenTelemetry

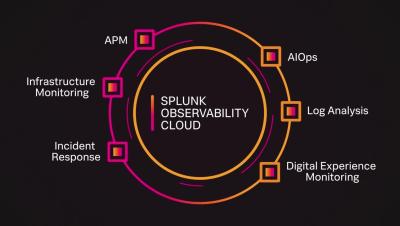

Modern environments often span multiple clouds, a hybrid of on-premises and cloud infrastructure, or both, which makes it hard to collect telemetry from every source. Each cloud provider has its own tools, and those tools send data to that provider's own telemetry platform. OpenTelemetry can monitor cloud VMs, on-premises VMs, and bare-metal systems and send all of the data to a single monitoring platform, regardless of operating system or vendor.