Latest Posts

3 Simple EC2 Strategies To Ensure Cost Efficiency

What is CI/CD observability, and how are we paving the way for more observable pipelines?

Observability isn’t just about watching for errors or monitoring for basic health signals. Instead, it goes deeper so you can understand the “why” behind the behaviors within your system. CI/CD observability plays a key part in that. It’s about gaining an in-depth view of the entire pipeline of your continuous integration and deployment systems — looking at every code check-in, every test, every build, and every deployment.

From Cloud to AI: The Evolution of IT Infrastructure

Captain's Log: How we are leveraging CEL for Signals

As engineers, we didn't want Signals to be merely a replacement for what the existing incumbents offer. We've had our own gripes for years about the information architecture that many older companies still force you to implement. You should be able to send us any signal from any data source and create an alert based on conditions you define. We're no strangers to building features that include conditional logic, but we upped the ante when it came to Signals.

Track Frontend JavaScript exceptions with Playwright fixtures

Frankly, end-to-end testing and synthetic monitoring are challenging in today’s JavaScript-heavy world. There’s so much asynchronous JavaScript code running in modern applications that getting tests stable can be a real head-scratcher. That’s why many teams focus their testing on mission-critical features and treat “testing the rest” as a nice-to-have. It’s a typical cost-effort decision.

Secure and monitor infrastructure networking with Buoyant Enterprise for Linkerd in the Datadog Marketplace

As organizations adopt Kubernetes, they face gaps in security, reliability, and observability, such as unencrypted communication, lack of multi-cluster support, and missing reliability features like circuit breaking. Buoyant Cloud is the dashboarding and automated monitoring component of Buoyant Enterprise for Linkerd, which helps organizations secure and monitor communication between Kubernetes workloads.

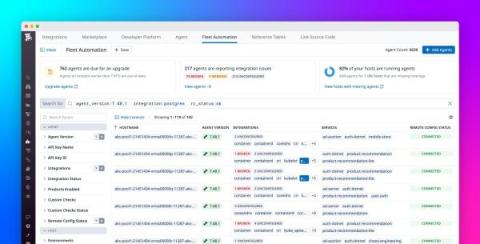

Centrally govern and remotely manage Datadog Agents at scale with Fleet Automation

As customers scale to thousands of hosts and deploy increasingly complex applications, it can be difficult to ensure that every host is configured to give you the visibility you need to monitor your infrastructure and applications. To maintain that visibility across a growing number of hosts, you need to know that your observability strategy is implemented uniformly across the entire fleet of Datadog Agents installed on them.

What is tool consolidation - and how can AIOps optimize it?

Tool consolidation is the process of analyzing which IT observability and monitoring tools to use, which to add, and which to retire. By carefully determining the usage and value of your current observability stack, your ITOps teams can consolidate redundant tools and those providing little value to reduce your operational costs. While the benefits of tool consolidation are clear, the process itself is anything but.

Tame observability complexity: Understanding the observability tool landscape

Choosing, deploying, maintaining, and rationalizing observability and monitoring tools can be a constant challenge for ITOps, DevOps, and SRE teams. As teams monitor increasingly complex systems, the instrumentation needed to monitor them grows at the same rate, which leads directly to a mounting problem of observability data engineering, integration, and enrichment.