
Analytics

Announcing Single Sign-On (SSO) Support for CHAOSSEARCH

We are thrilled to announce that we now offer Single Sign-On (SSO) support for ALL customers on the CHAOSSEARCH platform. You can now integrate your existing identity provider with CHAOSSEARCH and have your users access the platform without needing to manage a separate set of credentials.

Seeing is Believing: Announcing the DevOps Pulse 2019 with a Focus on Observability

In the world of software engineering, observability seems to be the talk of the town. We discuss it at conferences, read about it in blogs and articles, and see it promised to us by vendor after vendor. But what is observability? What recent developments have made it such an integral concept? What strategies are engineers employing to ensure observability? And, most importantly, why are engineers looking to achieve it?

Running Spark with Jupyter Notebook & HDFS on Kubernetes

Kublr and Kubernetes can help make your favorite data science tools easier to deploy and manage. Hadoop Distributed File System (HDFS) carries the burden of storing big data, Spark provides many powerful tools to process that data, and Jupyter Notebook is the de facto standard UI for dynamically managing queries and visualizing results.

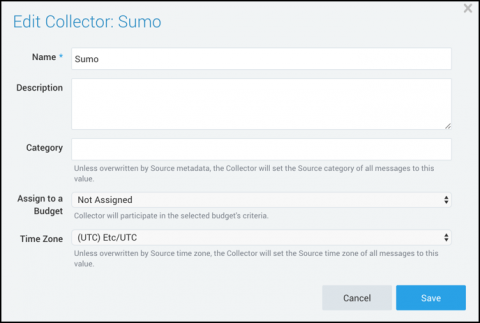

How to Monitor Fastly CDN Logs with Sumo Logic

In the last post, we talked about the different ways to monitor Fastly CDN logs and why it’s crucial to get a deeper understanding of your log data through a service like Sumo Logic. In the final post of our Fastly CDN blog series, we will discuss how to use Sumo Logic to get the most insight out of your log data: from collecting Fastly CDN log data to using the various Sumo Logic dashboards for Fastly.

Monitor your customer data infrastructure with Segment and Datadog

This is a guest post by Noah Zoschke, Engineering Manager at Segment. Segment is the customer data infrastructure that makes it easy for companies to collect, clean, and control their first-party customer data. At Segment, our ultimate goal is to collect data from Sources (e.g., a website or mobile app) and route it to one or more Destinations (e.g., Google Analytics and Amazon Redshift) as quickly and reliably as possible.

Monitor Apache Hive with Datadog

Apache Hive is an open-source interface that allows users to query and analyze distributed datasets using SQL-like queries (HiveQL). Hive compiles these queries into an execution plan, which it then runs against your Hadoop deployment. You can customize Hive by using a number of pluggable components (e.g., HDFS and HBase for storage, Spark and MapReduce for execution). With our new integration, you can monitor Hive metrics and logs in context with the rest of your big data infrastructure.

How to share reports, dashboards and tables using Analytics Plus

Big Data and Kubernetes - Why Your Spark & Hadoop Workloads Should Run Containerized...(1/4)

Starting this week, we will publish a series of four blog posts on the intersection of Spark and Kubernetes. The first post will delve into the reasons why both platforms should be integrated. The second will take a deep dive into Spark/K8s integration. The third will discuss use cases for serverless and big data analytics. The last post will round off the series with insights on best practices.

Gartner Lists Anodot as a Leading AIOps Vendor

A recent report by Gartner sheds light on the world of AIOps and on why organizations need to deploy it today. AIOps is a modern approach to IT operations that is based on recent advances in AI technology. Gartner’s vision of the AIOps platform is one that enables continuous insights across IT operations management.