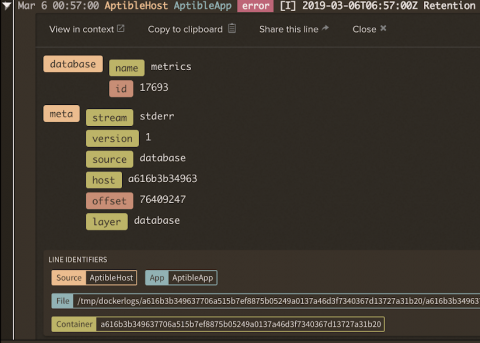

How LogDNA Scales Elasticsearch

I spoke at Container World 2019 in Santa Clara about what LogDNA has learned over the years from scaling Elasticsearch on Kubernetes. Here are some highlights from the talk; the slide deck is also available below.