Latest News

Serverless observability: Lumigo or AWS X-Ray

Observability is a measure of how well we are able to infer the internal state of our application from its external outputs. It’s an important measure because it indirectly tells us how well we’d be able to troubleshoot problems that will inevitably arise in production. It’s been one of the hottest buzzwords in the cloud space for the last 5 years and the marketplace is swamped with observability vendors. Different tools employ different methodologies for collecting data.

How Logz.io Uses Observability Tools for MLOps

Logz.io is one of Logz.io’s biggest customers. To handle the scale our customers demand, we must operate a high-scale, 24/7 environment with close attention to performance and security. To accomplish this, we ingest large volumes of data into our service. As we continue to add new features and build out our machine learning capabilities, we’ve incorporated new services and capabilities.

Bridge Your Data Silos to Get the Full Value from Your Observability and Security Data

In my work as a technical evangelist at Cribl, I regularly talk to companies seeing annual data growth of 45%, which is unsustainable given current data practices. How do you cost-effectively manage this flood of data while generating business value from critical data assets?

Q&A from Our Recent Observability Webinar

Earlier this month I hosted the “Everything You’ve Heard About Observability is Wrong (Almost)” webinar. Thanks to all of you who attended. I wanted to follow up with the attendees as well as those who were not able to join. As promised, it wasn’t the same old observability presentation that we have grown accustomed to: you know, all marketing with little value.

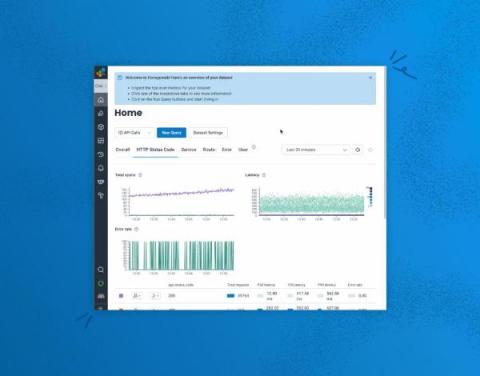

Debugging Just Got Faster and Easier With New Enhancements to BubbleUp

BubbleUp is Honeycomb’s machine-assisted debugging feature and is one of our most powerful differentiators. It leverages machine analysis to cycle through all of the attributes found in billions of rows of telemetry to surface what is in common with problematic data compared to baseline data. This explains the context of anomalous code behavior by surfacing exactly what changed when you don’t know which attributes to examine or index, dramatically accelerating the debugging process.

Where Are You In Your Observability Journey?

Observability is the ability to see and understand the internal state of a system from its external outputs. Logs, metrics, and traces, collectively called observability data, are the external outputs widely considered to be the three pillars of observability. Now more than ever, organizations of all sizes must employ the necessary processes and technologies to harness the power of their data and make it more actionable.

Get More Out of OpenTelemetry With Honeycomb's Latest Updates

Just a few short months ago, we talked about a bunch of updates to Honeycomb’s support for OpenTelemetry. To the surprise of no one, we’ve got more updates to share!

Improve Application Reliability With 4T Monitors

StackState’s new 4T Monitors introduce the ability to monitor IT topology as it changes over time. Now your observability processes can trigger alerts on changes in topology that don’t match an ideal state, on deviations in metrics and events, and on complex combinations of parameters. Monitoring topology as part of your observability efforts enriches the concept of environment health by adding the dimension of topology.

Authors' Cut: Gear Up! Exploring the Broader Observability Ecosystem of Cloud-Native, DevOps, and SRE

You know that old adage about not seeing the forest for the trees? In our Authors’ Cut series, we’ve been looking at the trees that make up the observability forest—among them, CI/CD pipelines, Service Level Objectives, and the Core Analysis Loop. Today, I'd like to step back and take a look at how observability fits into the broader technical and cultural shifts in technology: cloud-native, DevOps, and SRE.