AI

Effortless Engineering: Quick Tips for Crafting Prompts

Large Language Models (LLMs) are all the rage in software development, and for good reason: they offer real opportunities to improve our software. At Honeycomb, we saw one such opportunity in Query Assistant, a feature that helps engineers ask questions of their systems in plain English.

AI Explainer: Glossary of Artificial Intelligence Terms

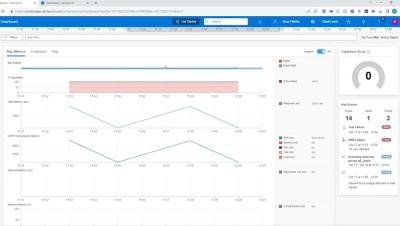

Countering Internet Complexity with AI Powered Internet Performance Monitoring

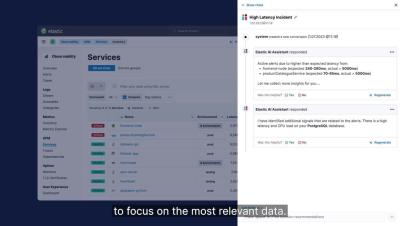

Elevating Incident Management: Leveraging automation and AI to put reliability on autopilot

OpenTelemetry for LLMs: OpenLLMetry with SigNoz and Traceloop

OpenTelemetry for AI: OpenLLMetry with SigNoz and Traceloop

Artificial Intelligence: Friend or Foe?

From the science fiction fantasies of the mid-20th century to today's reality, AI's journey has been a blend of innovation and apprehension. As we contemplate the future of AI, it’s interesting to look back at the early days of AI, how far it’s come and what we might yet expect. AI has the potential to be of huge benefit but could be disruptive in the wrong hands, particularly in the realm of cybersecurity.

A Brief History and Development of AI