
Build a resilient cybersecurity framework by transforming your IT team into a security team

More organizations than ever before have shifted to a hybrid work culture to reduce the impact of COVID-19. This unprecedented change has not only given rise to new security challenges but has also considerably increased the attack surface. The mix of personal and corporate endpoints, the geographic spread of resources, and a sharp rise in the overall number of security threats further complicate an already labor-intensive security landscape.

Elastic Contributor Program: How to submit and validate a contribution

Last month we launched the Elastic Contributor Program to recognize and reward the hard work of our awesome contributors, encourage knowledge sharing within the Elastic community, and build friendly competition around contributions. But how do you start contributing? In this blog post, we’ll walk through how to log in to the Elastic Contributor Program portal and set up your profile so you can begin submitting your own contributions and validating others’ contributions!

Add flexibility to your data science with inference pipeline aggregations

Elastic 7.6 introduced the inference processor for performing inference on documents as they are ingested through an ingest pipeline. Ingest pipelines are incredibly powerful and flexible, but they only run at ingest time. So what happens if your data has already been ingested? Enter the new Elasticsearch inference pipeline aggregation, which lets you apply new inference models to data that has already been indexed.
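To make the idea concrete, here is a minimal sketch in Python of a search request body that uses the inference bucket aggregation. It assumes a trained model already exists in the cluster under the hypothetical ID `my-trained-model`, and that the model was trained on two features named `avg_value` and `max_value` (also hypothetical). The index field names `client_id` and `response_time` are placeholders too. The `model_id` and `buckets_path` parameters are taken from the Elasticsearch inference bucket aggregation reference, but verify them against the documentation for your version.

```python
import json


def inference_agg_request(model_id: str, terms_field: str, metric_field: str) -> dict:
    """Build a search body that runs a trained model on each terms bucket.

    All field names, aggregation names and model feature names here are
    hypothetical placeholders chosen for illustration.
    """
    return {
        "size": 0,  # only aggregation results are needed, not individual hits
        "aggs": {
            "per_entity": {
                "terms": {"field": terms_field},
                "aggs": {
                    # Metric sub-aggregations whose values become the model's inputs.
                    "avg_metric": {"avg": {"field": metric_field}},
                    "max_metric": {"max": {"field": metric_field}},
                    # Inference pipeline aggregation: each buckets_path key is a
                    # feature name the model expects (assumed here), and each value
                    # is the path to a sibling aggregation in the same bucket.
                    "model_prediction": {
                        "inference": {
                            "model_id": model_id,
                            "buckets_path": {
                                "avg_value": "avg_metric",
                                "max_value": "max_metric",
                            },
                        }
                    },
                },
            }
        },
    }


if __name__ == "__main__":
    body = inference_agg_request("my-trained-model", "client_id", "response_time")
    print(json.dumps(body, indent=2))
```

The printed body could then be sent to an index's `_search` endpoint with any HTTP client. Each terms bucket in the response would carry the model's output under `model_prediction` alongside its metric values, without reindexing the underlying documents.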

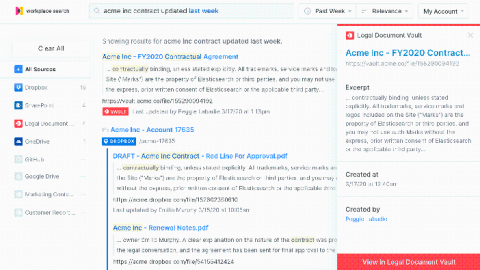

Getting started with Elastic Workplace Search on Elastic Cloud

Chances are you have already spent a big part of your day looking for a document, an email, or an answer buried deep within a Google Slides presentation. Thankfully, you've landed in the right place. With Workplace Search, finding the right information across all your cloud and on-premises data platforms is easier than ever, and it's just a few clicks away.

Introducing the InfluxDB Template UI: Monitoring Made Simple

At InfluxData, we’re obsessed with time to awesome — how quickly can you start working productively with time series data? What can we do to make things better? InfluxDB Templates are a great example of this mindset. Back in April, we announced Templates as a way to package up everything you need to monitor a particular technology — Telegraf configurations and InfluxDB Dashboards, Tasks, Alerts, and related artifacts — into a single configuration file.

Investigative analysis of disjointed data in Elasticsearch with the Siren Platform

At Siren, we build a platform used for "investigative intelligence" in law enforcement, intelligence, and financial fraud. Investigative intelligence is a specialisation of data analytics that serves the needs of those who hunt for bad actors. Such investigations are the primary focus of law enforcement and intelligence agencies, but they are also critical to uncovering financial crime and to threat hunting in cybersecurity.

How We're Cutting $360K From Anodot's Annual Cloud Costs

With the pandemic forcing businesses worldwide to reboot, many have no choice but to enact drastic cost-cutting measures to keep the lights on. Cloud computing is an expense every digital business incurs, and unlike many other operating costs, it is largely variable.

TL;DR InfluxDB Tech Tips - From Subqueries to Flux!

In this post, we translate InfluxQL subqueries from InfluxDB 1.x into Flux, the functional data scripting and query language available in InfluxDB 1.8 and later, in both OSS and Cloud. The subqueries translated here come from this blog post. This post assumes that you have a basic understanding of Flux. If you're entirely unfamiliar with Flux, I recommend checking out the following documentation and blog posts.

MLTK Smart Workflows

I'm excited to announce the launch of a new series of apps on Splunkbase: MLTK Smart Workflows. These apps are domain-specific workflows, each built around a particular use case, that help you develop a set of machine learning models with your own data. In this blog post, I'd like to take you through the process we adopted for developing the workflows.