
Logging

The latest news and information on log management, log analytics, and related technologies.

Artifactory & Xray Logging Analytics with FluentD, ElasticSearch and Kibana

OpenTelemetry, Open Collaboration

OpenTelemetry, the merger of OpenCensus and OpenTracing, appeared in May 2019. It was led by companies such as Omnition (now part of Splunk), Google, Microsoft, and others pushing the boundaries of observability. OpenTelemetry is a Cloud Native Computing Foundation (CNCF) project that has gathered contributors and supporters far and wide, becoming one of the most active open source projects today. It currently ranks second, behind only Kubernetes!

What's New in Logz.io with Infrastructure Monitoring? Upgrading to Open Source Grafana 7

Logz.io Infrastructure Monitoring just got some substantive upgrades to kick off the summer. The major change is the upgrade to Grafana 7, specifically version 7.0.3, which brings several new features. The Logz.io deployment will also let users apply Grafana to logs. The Transform tab lets users quickly add non-time-series data to tables and then pair it with other data (time series or not) in their Metrics deployment.

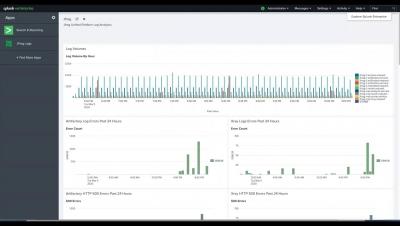

SAI Something Linux: Monitoring Linux with Splunk App for Infrastructure

Metrics and logs go together like cookies and milk. Metrics tell you when you have a problem, and logs/events often tell you why that problem happened. But it's always been harder than it needed to be to get both types of data onto a single screen, especially when the sysadmins using the tools aren't experts in managing those monitoring platforms day to day.

Introduction into Eland - DataFrames and Machine Learning backed by Elasticsearch

Logging Best Practices in the CI/CD Era

Log aggregation and the journey to optimized logs

Ever experienced bad logging, whether it's the wrong log, the wrong information, or any of a multitude of other logging woes? We've lost count of the times we've happily added log lines, only to find out that it was all for naught. The frustrations are endless. What is meant to be magic for your code, the ultimate savior when debugging, has become the ultimate frustration.

Logging Best Practices Part 2: General Best Practices

Isn't all logging pretty much the same? Logs appear by default, like magic, without any further intervention by teams other than simply starting a system… right? While logging may seem like simple magic, there's a lot to consider. Logs don't just automatically appear for all levels of your architecture, and any logs that do appear automatically probably lack the details you need to understand what a system is doing.
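One common way to supply the details that default logs lack is to emit structured log lines that carry request context. The sketch below uses only Python's standard `logging` and `json` modules; the `JsonFormatter` class, the `build_logger` helper, and the context keys (`request_id`, `user_id`, `service`) are hypothetical names chosen for illustration, not part of any product mentioned here.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object, including known context fields."""

    # Hypothetical context keys; callers attach them via the `extra` argument.
    CONTEXT_KEYS = ("request_id", "user_id", "service")

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Copy only the context fields that were actually set on this record.
        for key in self.CONTEXT_KEYS:
            if hasattr(record, key):
                payload[key] = getattr(record, key)
        return json.dumps(payload)


def build_logger(name: str) -> logging.Logger:
    """Return a logger that writes JSON lines to stderr (hypothetical helper)."""
    logger = logging.getLogger(name)
    if not logger.handlers:  # avoid stacking duplicate handlers on repeat calls
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    log = build_logger("checkout")
    log.info("payment declined", extra={"request_id": "abc123", "service": "checkout"})
```

Because every line is a self-describing JSON object, a log aggregator can filter on `request_id` instead of grepping free text; which fields matter will depend on your own architecture.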

Diagnosing out-of-memory errors on Linux

Out-of-memory (OOM) errors take place when the Linux kernel can’t provide enough memory to run all of its user-space processes, causing at least one process to exit without warning. Without a comprehensive monitoring solution, OOM errors can be tricky to diagnose. In this post, you will learn how to use Datadog to diagnose OOM errors on Linux systems.
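The post itself uses Datadog; as a tool-independent first step, you can look for the kernel's OOM-killer messages in the kernel log (for example, the text printed by `dmesg` or `journalctl -k`). The sketch below scans such text for the victim process. The message wording varies between kernel versions, so the regular expression is an assumption covering the common "Killed process" (newer) and "Kill process" (older) forms; `OomKill` and `find_oom_kills` are hypothetical names.

```python
import re
from typing import List, NamedTuple

# Assumed message shapes (wording differs across kernel versions):
#   "Out of memory: Killed process 1234 (java) total-vm:..."            (newer kernels)
#   "Out of memory: Kill process 1234 (java) score 900 or sacrifice child" (older kernels)
# IGNORECASE lets it also match lines such as "Memory cgroup out of memory: Killed process ...".
OOM_PATTERN = re.compile(
    r"out of memory: kill(?:ed)? process (\d+) \(([^)]+)\)", re.IGNORECASE
)


class OomKill(NamedTuple):
    pid: int
    command: str


def find_oom_kills(kernel_log: str) -> List[OomKill]:
    """Return each (pid, command) the OOM killer reports having terminated."""
    return [
        OomKill(int(match.group(1)), match.group(2))
        for match in OOM_PATTERN.finditer(kernel_log)
    ]


if __name__ == "__main__":
    sample = (
        "[12345.678901] Out of memory: Killed process 4242 (java) total-vm:8388608kB\n"
        "[12346.000000] eth0: link up\n"
        "[12400.111111] Out of memory: Kill process 777 (postgres) score 900 or sacrifice child\n"
    )
    for kill in find_oom_kills(sample):
        print(kill.pid, kill.command)
```

Finding the victim only tells you which process died; correlating it with memory metrics over the same window is what explains why the kernel ran out of memory.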