Synthetics

10 Best Synthetic Monitoring & Testing Tools of 2020: Pros & Cons Comparison

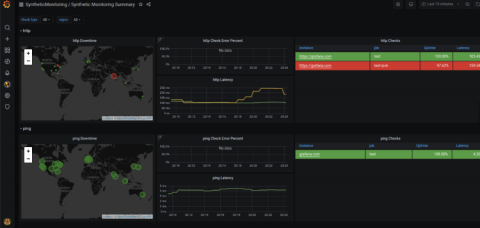

Synthetic monitoring has been a staple of performance testing for years, and it will probably stay that way for quite some time. Also called proactive monitoring, it is a relatively simple way of keeping track of your website’s performance, and it is your safest bet for meeting your visitors’ expectations.

The Next Generation of Synthetic Monitoring

Site24x7 Synthetic transaction monitoring using a real browser

Intro to synthetic monitoring - and Grafana Labs' new iteration on worldPing

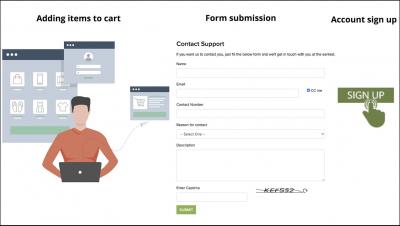

Often there’s a focus on how a service is running from the perspective of the organization. But what does service health monitoring look like from the perspective of a user? Today, understanding your end users’ experience is a key component of ensuring your website or application is functioning correctly. Having a website that is performing well regardless of location, load, or connection type is no longer a nice-to-have, but rather a requirement.

Boost CDN Performance by 20-40% with Catchpoint Synthetic Monitoring

Content Distribution Networks (CDNs) have been around for more than two decades, and according to Intricately’s 2019 report, more than a million companies around the world use CDN services. In the chart below, they have compared four popular CDNs, but there are several other major CDN providers, such as Verizon Media (Edgecast) and Lumen. Large enterprises such as LinkedIn, eBay, Walmart, and others have already implemented a multi-CDN approach to power all their applications.

Managing Digital Experience Using Synthetic Monitoring

IT monitoring and management have traditionally focused on an enterprise’s IT backbone: its data centers, servers, networks, and so on. However, with more and more employees working from home these days, and customers and partners scattered around the world, organizations have found it critical to monitor and manage an extended network connection to ensure a superior digital experience for their employees, customers, and partners.

Incorporate Datadog Synthetic tests into your CI/CD pipeline

Testing within the CI/CD pipeline, also known as shift-left testing, is a DevOps best practice that enables agile teams to continually assess the viability of new features at every stage of the development process. Running tests early and often makes it easier to catch issues before they impact your users, reduce technical debt, and foster efficient, cross-team collaboration.
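As a rough illustration of the idea, a pipeline step can turn test results into an exit code that blocks or allows a deployment. The sketch below is generic Python and does not use Datadog’s API; the function name `gate`, the `max_failures` parameter, and the test names are all hypothetical.

```python
def gate(results, max_failures=0):
    """Return a process exit code for a CI step.

    `results` maps a (hypothetical) synthetic test name to True if it
    passed and False if it failed. The step succeeds (0) only when the
    number of failures does not exceed `max_failures`.
    """
    failed = [name for name, passed in results.items() if not passed]
    for name in failed:
        print(f"FAILED: {name}")
    return 0 if len(failed) <= max_failures else 1


# Hypothetical results collected earlier in the pipeline.
results = {"login-flow": True, "checkout-flow": False}
exit_code = gate(results)
print(f"exit code: {exit_code}")
```

In a real pipeline, the step would pass `exit_code` to `sys.exit` so that a non-zero value stops the build before the change reaches users.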

Website Benchmarking: An Example on How to Benchmark Performance Against Competitors

Time to first byte, first contentful paint, DNS response time, round-trip time: the list goes on and on. With all of these metrics, how are you supposed to know which ones are the most important to monitor? To understand what those numbers are supposed to look like, you’ll need a reference point, something that gives you a starting point.
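One simple way to build such a reference point, sketched here under assumptions, is to compare your own measurement of a metric with the median of your competitors’ measurements. The function name `benchmark` and the sample values are hypothetical and serve only to illustrate the comparison.

```python
from statistics import median


def benchmark(own_ms, competitor_ms):
    """Compare one metric (in milliseconds) with a competitor reference.

    The reference point is the median of the competitor measurements,
    which is less sensitive to a single outlier than the mean.
    """
    reference = median(competitor_ms)
    return {
        "reference_ms": reference,
        "delta_ms": own_ms - reference,
        "slower_than_reference": own_ms > reference,
    }


# Hypothetical time-to-first-byte samples, in milliseconds.
result = benchmark(420.0, [310.0, 380.0, 450.0])
print(result)
```

A positive `delta_ms` means your site is slower than the reference for that metric; the same comparison can be repeated for each metric you decide to track.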

Best practices for maintaining end-to-end tests

In Part 1, we looked at some best practices for getting started with creating effective test suites for critical application workflows. In this post, we’ll walk through best practices for making test suites easier to maintain over time. We’ll also show how Datadog can help you easily adhere to these best practices to keep test suites maintainable while ensuring a smooth troubleshooting experience for your team.